This post was written for MachineLearningForKids.co.uk/stories: a series of stories I wrote to describe student experiences of artificial intelligence and machine learning, drawn from the time I spend volunteering in schools and code clubs.

Students can make a Scratch project to play Rock, Paper, Scissors. They use their webcam to collect example photos of their hands making the shapes of ‘rock’ (fist), ‘paper’ (flat hand), and ‘scissors’ (two fingers). Then they use those photos to train a machine learning model to recognise their hand shapes.

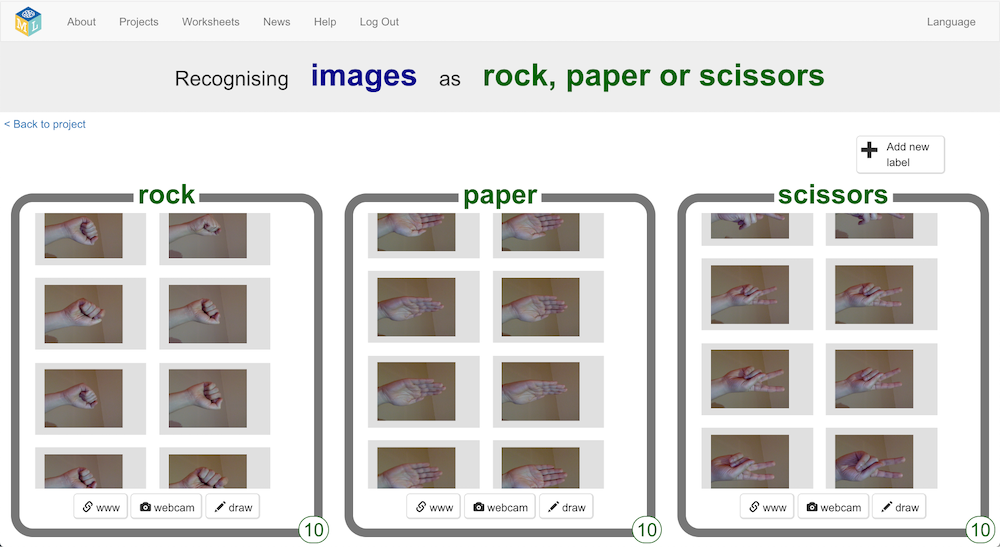

I often have at least one enthusiastic (or impatient!) student keen to create their machine learning model as quickly as possible. They’ll hold their hand fairly still in front of the webcam, and keep hitting the camera button. The result is that they’ll take a large number of very similar photos.

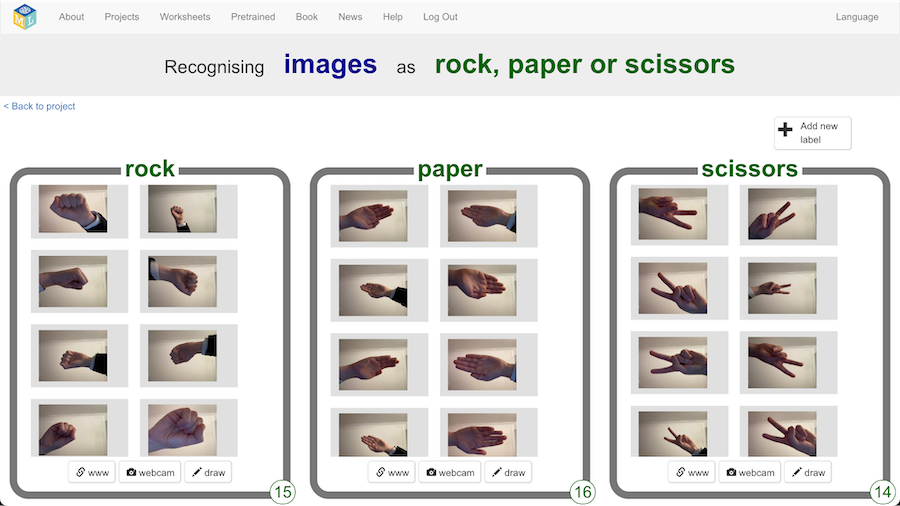

Other students will naturally create a range of different photos. They’ll take some photos of their left hand, and some photos of their right hand. They’ll take some photos of their hand held very close to the webcam, looking large in the photo, and some with their hand held far away from the webcam, looking small. They’ll take photos of their hand pointing in different directions, and from a variety of angles.

If left to experiment and encouraged to compare their projects, students will notice differences in the way that their different projects behave.

Projects trained with a wider variety of training examples often perform better. Their models make fewer mistakes, and give higher confidence scores for the hand shapes they recognise correctly.

Projects trained with a very similar set of training examples make more mistakes, particularly when students play each other’s Rock, Paper, Scissors games, holding their hand in a position or at an angle different from the one used in the training photos.

Letting students try each other’s projects allows them to see for themselves that machine learning models trained with a wider variety of training examples perform better.

Tags: machine learning, scratch