This post was written for MachineLearningForKids.co.uk/stories: a series of stories I wrote to describe student experiences of artificial intelligence and machine learning, based on what I’ve seen while volunteering in schools and code clubs.

Some of the best lessons I’ve run have been where a machine learning model did the “wrong” thing. Students learn a lot from seeing an example of machine learning not doing what we want.

Perhaps my favourite example of when something went wrong was a lesson that I did on Rock, Paper, Scissors.

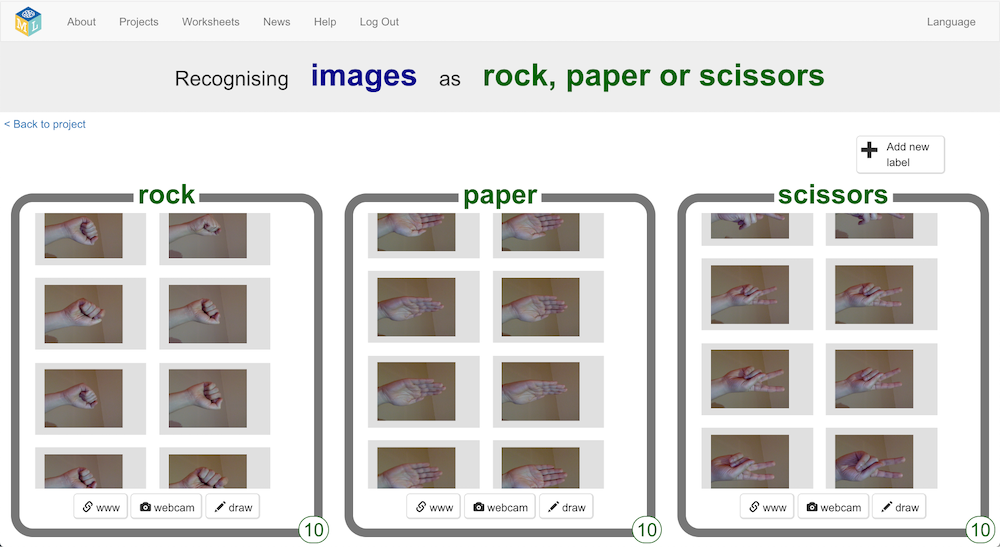

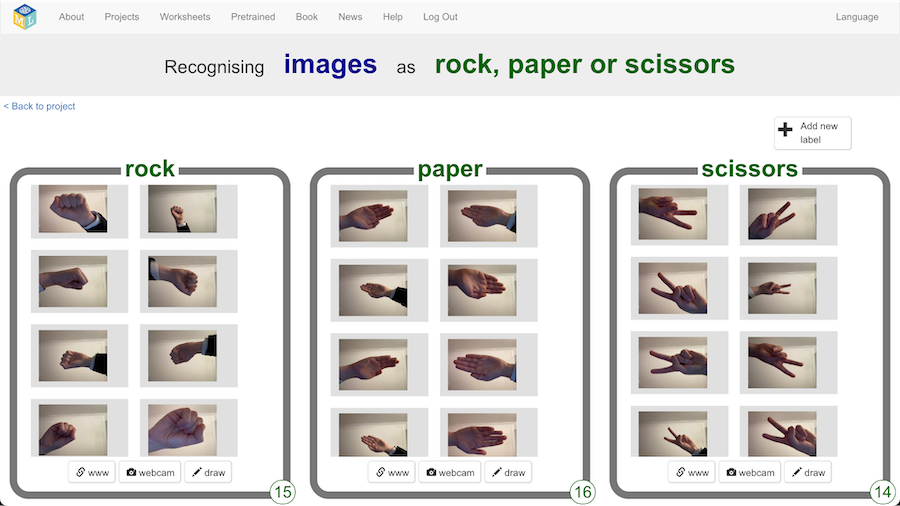

Students make a Scratch project to play Rock, Paper, Scissors. They use their webcam to collect example photos of their hands making the shapes of rock (fist), paper (flat hand), and scissors (two fingers) – and use those photos to train a machine learning model to recognise their hand shapes.

In this particular lesson, the project worked really nicely for nearly all the students. For one student, though, things went a little bit wrong.

This student had collected a decent set of training photos of their hand in a ‘rock’ shape. And a good set of training photos of their hand making a ‘scissors’ shape.

While they were taking their training photos of their hand making the ‘paper’ shape, one of their friends in the class had come over to chat to them.

Their friend was stood next to them while they were taking nearly all of these photos. And because of the way their webcam was angled, their friend was visible in nearly all the ‘paper’ training photos.

None of us spotted that at first. None of us thought anything of it.

Their machine learning model learned that a photo of a hand meant ‘rock’ or ‘scissors’, depending on the hand shape.

But it learned that a photo of a hand and a person meant ‘paper’.

We only noticed this when the student started playing Rock, Paper, Scissors against the computer. When his teacher or I came around to see how he was getting on and stood by him, his machine learning model kept reporting that he was doing ‘paper’, whatever hand shape he made.

For example, he’d have his hand in a ‘rock’ shape, but if we were stood by him, the machine learning model reported that he was doing ‘paper’. When we stepped away, it correctly recognised he was doing ‘rock’.

It took us a little while to work out what was going on. When we eventually spotted what had happened, it became a fantastic, albeit unplanned, lesson for the class.

I think they learned more from that one student’s project than anything I had planned for the lesson.

Machine learning models learn from patterns in the training data that we give them, whether or not those are patterns we’d intended to give.

Because seeing things go wrong is such a useful experience for students, I don’t always rely on it to happen in an unplanned way. Sometimes I have to resort to planting a trap if there’s something I want students to see happen.

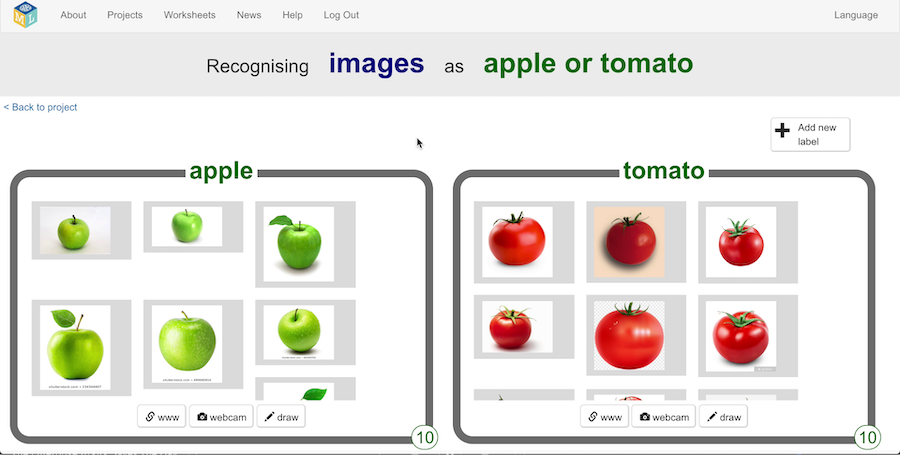

For example, in one lesson, I helped students train a machine learning model to recognise whether a photo shows an apple or a tomato.

I told them that I was saving them time by pointing them at where they could find images to use for training.

You can probably see what I was planning with this from the screenshot: I’d pointed them at photos of green apples and red tomatoes.

They used these to train a machine learning model.

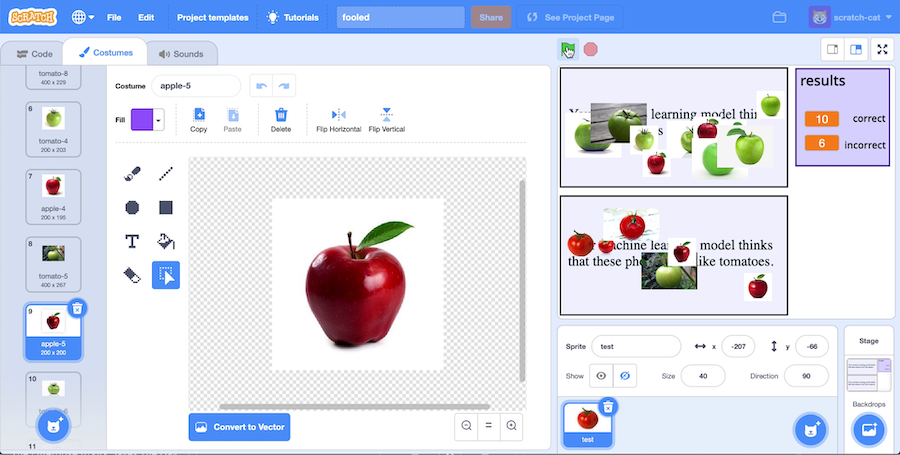

I helped them to make a test in Scratch – something that would take a new set of test photos and sort them into two groups: apples and tomatoes.

Again, I told them that I was being helpful, and I gave them a set of test photos to use. But I gave them a bunch of photos of red apples, and (unripe) green tomatoes.

Predictably, their machine learning models made many mistakes.

Their models had learned that red objects are normally tomatoes, and green objects are normally apples. Because that’s what we had taught them. But that isn’t always true.

That was a little contrived, but it was still useful. It got the students thinking about how things can go wrong and the things we can do to try and avoid that.
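For readers who would like to see the same trap outside of Scratch, here is a minimal Python sketch of the idea. It is an illustration under loud assumptions, not how the students' models actually work: each "photo" is reduced to a made-up average (red, green) colour, and the "model" is a simple nearest-centroid classifier that I've swapped in for a real image classifier. The data and the names `TRAINING`, `TEST`, `train` and `classify` are all hypothetical.

```python
# A toy version of the apple/tomato trap.
# ASSUMPTION: every value below is invented for illustration. Each "photo" is
# reduced to its average (red, green) colour on a 0-255 scale, so colour is
# the only pattern the model can possibly learn.
TRAINING = {
    "apple": [(70, 180), (90, 200), (60, 170)],    # green apples only
    "tomato": [(210, 40), (190, 60), (220, 50)],   # red tomatoes only
}

TEST = [
    ("apple", (200, 50)),    # red apple
    ("apple", (215, 45)),    # red apple
    ("tomato", (80, 175)),   # unripe green tomato
    ("tomato", (65, 190)),   # unripe green tomato
]


def centroid(points):
    """Return the average (red, green) colour of a list of points."""
    count = len(points)
    return tuple(sum(point[i] for point in points) / count for i in range(2))


def train(examples):
    """'Train' by remembering the average colour seen for each label."""
    return {label: centroid(points) for label, points in examples.items()}


def classify(model, colour):
    """Pick the label whose average training colour is closest to this colour."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(model[label], colour))
    return min(model, key=squared_distance)


model = train(TRAINING)
predictions = [classify(model, colour) for _, colour in TEST]
correct = sum(pred == label for pred, (label, _) in zip(predictions, TEST))
accuracy = correct / len(TEST)

for (label, colour), pred in zip(TEST, predictions):
    print(f"actual={label:<6} colour={colour} predicted={pred}")
print(f"accuracy: {accuracy:.0%}")
```

Every test photo is misclassified, because colour was the only thing that separated the two groups in the training data. Adding red apples and green tomatoes to `TRAINING` would make colour alone a poor guide, which mirrors the fix the students arrived at: train with more varied examples.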

Examples of this sort of mistake are sometimes reported in the media, such as an image classifier trained to recognise skin tumours that accidentally learned to recognise rulers in medical photos. Those are useful lessons, but seeing it happen for themselves gives students a unique insight, whether it happens organically or is orchestrated and planned.

Machine learning models learn from patterns in the training data that we give them, whether or not those are patterns we’d intended to give.
