Last week, I came up with a quick hack, explained quite neatly by @crouchingbadger:

Dale Lane’s TV watches him. It knows if he’s happy or surprised or sad. This is amazing. dalelane.co.uk/blog/?p=2092 (via @libbymiller)

— Ben Ward (@crouchingbadger) April 13, 2012

It was a bit of fun, even if it did seem to convince a group of commenters on Engadget that I was a rage-fuelled Xbox gamer. 🙂

There’s one big limitation with the hack, though: I don’t spend that much of my day in front of the TV.

It’s interesting to use it to measure my reactions to specific TV programmes or games. But thinking bigger, it’d be cool to try a hack that monitors me throughout the day to measure what kind of day I’m having.

I don’t spend much time in front of the TV, but I do spend a *lot* of time in front of my MacBook. And it has a camera, too!

What if my MacBook could look out for my face, and whenever it can see it, monitor what facial expression I have and whether I’m smiling? And while I’m at it, as I’ve been playing with sentiment analysis recently, add in whether the tweets I post sound positive or neutral.

Add that together, and could I make a reasonable automated estimate as to whether I’m having a good day?

I couldn’t reuse the Python code that I used to control the webcam for the TV. The best way to control the iSight camera on my MacBook seems to be QuickTime Java [1].

Here is the capture code:

```java
package com.dalelane.happiness;

import java.io.File;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;

import quicktime.QTException;
import quicktime.QTSession;
import quicktime.io.QTFile;
import quicktime.qd.Pict;
import quicktime.qd.QDGraphics;
import quicktime.qd.QDRect;
import quicktime.std.StdQTConstants;
import quicktime.std.StdQTException;
import quicktime.std.image.GraphicsExporter;
import quicktime.std.sg.SGVideoChannel;
import quicktime.std.sg.SequenceGrabber;

import com.github.mhendred.face4j.DefaultFaceClient;
import com.github.mhendred.face4j.FaceClient;
import com.github.mhendred.face4j.exception.FaceClientException;
import com.github.mhendred.face4j.exception.FaceServerException;
import com.github.mhendred.face4j.model.Face;

public class HappinessMonitor {

    // constants for grabbing a photo
    private final static int PICTURE_WIDTH_PX = 900;
    private final static int PICTURE_HEIGHT_PX = 600;
    private final static String PICTURE_TEMP_FILE_PATH = "/tmp/happinessmonitorcameragrab.jpg";

    // constants for how often to run
    private final static int POLLING_FREQUENCY_MS = 2000;

    // constants for face.com API
    private final static String FACECOM_API_KEY = "this-is-my-key-get-your-own";
    private final static String FACECOM_API_SECRET = "this-is-my-key-get-your-own";
    private final static String FACECOM_DALELANE_TAG = "dalelane@dale.lane";

    // constants for SQLite used to persist data
    private final static String SQLITE_DB_PATH = "log.db";

    public static void main(String[] args) {
        HappinessMonitor monitor = new HappinessMonitor();
        monitor.start();
    }

    private SGVideoChannel channel;
    private PreparedStatement insertStatement;

    public void start() {
        SequenceGrabber grabber = null;
        Connection dbConnection = null;
        try {
            // prepare camera
            grabber = initialiseCamera();

            // prepare client for face.com REST API
            FaceClient faceClient = new DefaultFaceClient(FACECOM_API_KEY, FACECOM_API_SECRET);

            // prepare database for storing face.com results
            dbConnection = connectToDB();

            while (true) {
                // take a picture with the iSight webcam camera
                File imagedata = takePicture(grabber);

                // upload to face.com
                if (imagedata != null) {
                    Face face = null;
                    try {
                        face = faceClient.recognize(imagedata, FACECOM_DALELANE_TAG).getFace();
                    }
                    catch (FaceClientException e) {
                        e.printStackTrace();
                    }
                    catch (FaceServerException e) {
                        e.printStackTrace();
                    }

                    // persist response from face.com
                    if (face != null) {
                        storeFaceInformation(face);
                    }
                }

                // wait a few seconds before doing this again
                Thread.sleep(POLLING_FREQUENCY_MS);
            }
        }
        catch (QTException e) {
            e.printStackTrace();
        }
        catch (InterruptedException e) {
            e.printStackTrace();
        }
        catch (SQLException e) {
            e.printStackTrace();
        }
        catch (ClassNotFoundException e) {
            e.printStackTrace();
        }
        finally {
            closeCamera(grabber);
            cleanupTempFiles();
            disconnectFromDB(dbConnection);
        }
    }

    private SequenceGrabber initialiseCamera() throws QTException {
        // initialise quicktime java
        QTSession.open();

        // create the image grabber
        SequenceGrabber seqGrabber = new SequenceGrabber();

        // prepare video channel
        QDRect bounds = new QDRect(PICTURE_WIDTH_PX, PICTURE_HEIGHT_PX);
        QDGraphics world = new QDGraphics(bounds);
        seqGrabber.setGWorld(world, null);
        channel = new SGVideoChannel(seqGrabber);
        channel.setBounds(bounds);

        // return grabber
        return seqGrabber;
    }

    private void closeCamera(SequenceGrabber grabber) {
        if (QTSession.isInitialized()) {
            if (grabber != null && channel != null) {
                try {
                    grabber.disposeChannel(channel);
                }
                catch (StdQTException e) {
                    e.printStackTrace();
                }
            }
        }
        QTSession.close();
    }

    private File takePicture(SequenceGrabber seqGrabber) throws QTException {
        // prepare channel
        final QDGraphics world = new QDGraphics(channel.getBounds());
        seqGrabber.setGWorld(world, null);
        channel.setBounds(channel.getBounds());

        // grab picture
        seqGrabber.prepare(false, true);
        final Pict picture = seqGrabber.grabPict(channel.getBounds(), 0, 1);

        // finished with grabber for the moment
        seqGrabber.idle();

        // convert the picture to something we can use
        File jpeg = convertPictToImage(picture);

        // cleanup
        world.disposeQTObject();

        return jpeg;
    }

    private File convertPictToImage(Pict picture) throws QTException {
        // use a graphics exporter to convert a quicktime image to a jpg
        GraphicsExporter exporter = new GraphicsExporter(StdQTConstants.kQTFileTypeJPEG);
        exporter.setInputPicture(picture);
        QTFile file = new QTFile(PICTURE_TEMP_FILE_PATH);
        exporter.setOutputFile(file);
        int filesize = exporter.doExport();
        exporter.disposeQTObject();

        // check if it was successful before returning
        File jpeg = null;
        if (filesize > 0) {
            jpeg = new File(PICTURE_TEMP_FILE_PATH);
        }
        return jpeg;
    }

    private void cleanupTempFiles() {
        File imgfile = new File(PICTURE_TEMP_FILE_PATH);
        if (imgfile.exists()) {
            imgfile.delete();
        }
    }

    private Connection connectToDB() throws SQLException, ClassNotFoundException {
        // connect to DB
        Class.forName("org.sqlite.JDBC");
        Connection dbConn = DriverManager.getConnection("jdbc:sqlite:" + SQLITE_DB_PATH);

        // ensure a table exists to store data
        dbConn.createStatement().execute("CREATE TABLE IF NOT EXISTS facelog(ts timestamp unique default current_timestamp, isSmiling boolean, smilingConfidence int, mood text, moodConfidence int)");

        // create preparedstatement for inserting readings
        insertStatement = dbConn.prepareStatement("INSERT INTO facelog(isSmiling, smilingConfidence, mood, moodConfidence) values(?, ?, ?, ?)");

        // return the open connection
        return dbConn;
    }

    private void storeFaceInformation(Face face) throws SQLException {
        // insert the mood and smiling info from the face.com API response into local DB
        insertStatement.setBoolean(1, face.isSmiling());
        insertStatement.setInt(2, face.getSmilingConfidence());
        insertStatement.setString(3, face.getMood());
        insertStatement.setInt(4, face.getMoodConfidence());
        insertStatement.executeUpdate();
    }

    private void disconnectFromDB(Connection conn) {
        if (conn != null) {
            try {
                conn.close();
            }
            catch (SQLException e) {
                e.printStackTrace();
            }
        }
    }
}
```

It’s using a different camera API, there are syntax changes in the move from Python to Java, I’m using a different client library for face.com, and I’ve dumped the stuff that was storing information about faces other than mine. But other than that, it’s much the same as the code that I shared last week.

It’s still dumping the results from the face.com API calls in a local SQLite database.

I’ve only just written this, so it’s not been running long enough to capture much data. I’ll run it for a while then come back and take a look.

I don’t seem to be doing well at looking for patterns in personal data recently, but I’m curious to see stuff like:

- whether I seem more cheerful at different times of day (I’m really not a morning person)

- whether I typically look more cheerful on a Friday than a Monday (should be a safe bet)

- whether I look more positive when the guy I share an office with is in, compared with when I’ve got the office to myself

- and so on
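The first two questions both come down to grouping observations by time. As a minimal sketch, assuming the rows from the `facelog` table have already been read into memory, something like this could work out the fraction of observations where I was smiling for each hour of the day and each day of the week. `SmileRates` and `Observation` are hypothetical names for illustration; they aren't part of `HappinessMonitor`.

```java
import java.time.DayOfWeek;
import java.time.LocalDateTime;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Hypothetical helper: a sketch of the analysis, not part of HappinessMonitor.
final class SmileRates {

    // One row from the facelog table: the timestamp and the isSmiling flag.
    record Observation(LocalDateTime ts, boolean smiling) {}

    private SmileRates() {}

    // Fraction of observations in each group that the classifier marked as smiling.
    static <K extends Comparable<K>> Map<K, Double> smileRateBy(
            List<Observation> observations, Function<Observation, K> groupKey) {
        Map<K, int[]> counts = new TreeMap<>(); // key -> {smiling count, total count}
        for (Observation o : observations) {
            int[] c = counts.computeIfAbsent(groupKey.apply(o), k -> new int[2]);
            if (o.smiling()) {
                c[0]++;
            }
            c[1]++;
        }
        Map<K, Double> rates = new TreeMap<>();
        counts.forEach((k, c) -> rates.put(k, (double) c[0] / c[1]));
        return rates;
    }

    // Am I really not a morning person? Group by hour of day (0-23).
    static Map<Integer, Double> byHourOfDay(List<Observation> observations) {
        return smileRateBy(observations, o -> o.ts().getHour());
    }

    // Friday versus Monday: group by day of the week.
    static Map<DayOfWeek, Double> byDayOfWeek(List<Observation> observations) {
        return smileRateBy(observations, o -> o.ts().getDayOfWeek());
    }

    public static void main(String[] args) {
        List<Observation> sample = List.of(
                new Observation(LocalDateTime.of(2012, 4, 16, 9, 0), false),
                new Observation(LocalDateTime.of(2012, 4, 16, 9, 30), true),
                new Observation(LocalDateTime.of(2012, 4, 20, 15, 0), true));
        System.out.println(byHourOfDay(sample));
        System.out.println(byDayOfWeek(sample));
    }
}
```

One thing to watch: SQLite's `current_timestamp` default records times in UTC, so the `ts` values would need converting to local time before grouping by hour, otherwise a grumpy 9am could end up filed under 8am.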

If I get a chance, I’m also curious to see what other data sources I can combine it with. For example, my scrobbles from last.fm. If I’m in front of my MacBook, I probably have my earphones in and Spotify running. Using the last.fm API, could I see whether certain types of music affect the mood reflected by my facial expression?
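Combining the two would mean joining two time series. A rough sketch, assuming each scrobble's timestamp marks when the track started and using a fixed window as a stand-in for the track length: for each scrobble, count the mood labels that face.com reported while it was playing. `ScrobbleMoodJoin` and `Scrobble` are hypothetical names, and fetching the scrobbles from the last.fm API isn't shown.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical helper: joins last.fm scrobbles to face.com mood observations by time.
final class ScrobbleMoodJoin {

    // Assumed shape of a parsed scrobble: when the track started, and who it was by.
    record Scrobble(Instant playedAt, String artist) {}

    private ScrobbleMoodJoin() {}

    // For each artist, tally the mood labels observed in [playedAt, playedAt + window).
    // The window must be positive. An artist with no matching observations maps to an
    // empty tally, which distinguishes "played, but no face seen" from "never played".
    static Map<String, Map<String, Integer>> moodsByArtist(List<Scrobble> scrobbles,
            NavigableMap<Instant, String> moodByTime, Duration window) {
        Map<String, Map<String, Integer>> result = new HashMap<>();
        for (Scrobble s : scrobbles) {
            // range query on the time-ordered map, rather than scanning every observation
            NavigableMap<Instant, String> during =
                    moodByTime.subMap(s.playedAt(), true, s.playedAt().plus(window), false);
            Map<String, Integer> tally = result.computeIfAbsent(s.artist(), a -> new TreeMap<>());
            for (String mood : during.values()) {
                tally.merge(mood, 1, Integer::sum);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Instant t0 = Instant.parse("2012-04-20T10:00:00Z");
        NavigableMap<Instant, String> moods = new TreeMap<>();
        moods.put(t0.plusSeconds(30), "happy");
        moods.put(t0.plusSeconds(200), "angry");
        List<Scrobble> scrobbles = List.of(
                new Scrobble(t0, "Artist A"),
                new Scrobble(t0.plusSeconds(180), "Artist B"));
        System.out.println(moodsByArtist(scrobbles, moods, Duration.ofMinutes(3)));
    }
}
```

Keying the observations on their timestamp in a `TreeMap` keeps each lookup to a `subMap` range query. Both sources would need to be on the same clock; since SQLite's `current_timestamp` is UTC, comparing it against epoch-based scrobble times should line up without any time zone conversion.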

Lots of stuff to try once I’ve got more data.

In the meantime, here is the data from today.

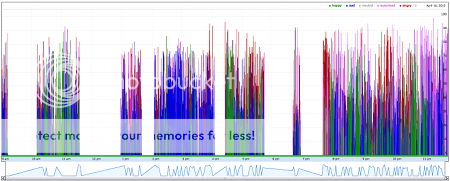

As before, this is an interactive time-series chart showing the mood that my facial expression was classified as. The y-axis shows how confident the classifier was in its evaluation, which I assume has some relationship with how strong the expression was.

And a screenshot for the Flash-deprived:

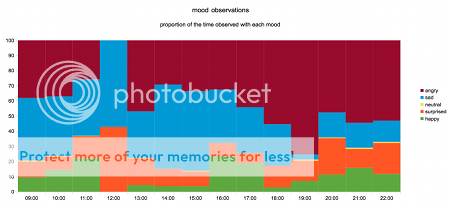

Alternatively, we can show the proportion of observations that were classified in each overall expression type:

Incidentally, given the reactions to my last post, it’s worth me repeating this:

I copied the labels from the face.com API. “angry” is a pretty broad bucket that covers a range of expressions including frustration, determination, etc.

I wouldn’t read too much into the pejorative labels. I’m not an angry person, honest 🙂

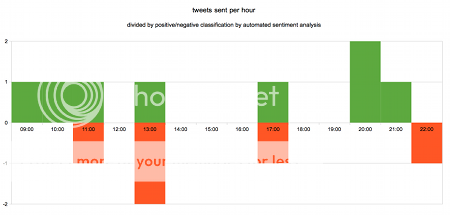
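Working out those proportions is just counting. A minimal sketch, assuming the `mood` column has been read into a list of strings (`MoodProportions` is a hypothetical name, not part of the capture code):

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical helper: share of observations for each face.com mood label.
final class MoodProportions {

    private MoodProportions() {}

    static Map<String, Double> proportions(List<String> moods) {
        Map<String, Double> result = new TreeMap<>();
        if (moods.isEmpty()) {
            return result; // nothing observed, so no proportions to report
        }
        // count how many times each label appears, sorted by label
        Map<String, Long> counts = moods.stream()
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));
        counts.forEach((mood, n) -> result.put(mood, n.doubleValue() / moods.size()));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(proportions(List.of("happy", "angry", "happy", "surprised")));
    }
}
```

The same counts could also come straight from the database with `SELECT mood, COUNT(*) FROM facelog GROUP BY mood`.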

How does this compare to my mood as expressed on Twitter?

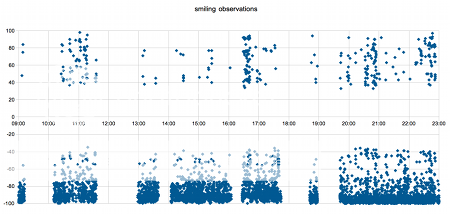

As well as mood, we can look at how many times the code observed me smiling.

Again, we can use the y-axis to show the confidence that the classifier had in identifying whether I was smiling or not. 100% means it was certain that I was smiling, which probably means that I had a big smile. 10% means that it wasn’t very certain, which perhaps means that I had a very small smile.

I’m showing smiling as positive values on the y-axis, and not-smiling as negative values on the y-axis.

Do I not smile very much? 😉

It looks even worse when you show the results as a proportion of time observed smiling versus not-smiling.
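Both smiling charts can be derived from the `isSmiling` and `smilingConfidence` columns. A minimal sketch of that transformation, assuming the confidence is stored as a percentage (`SmileSeries` and `SmileReading` are hypothetical names): the signed value gives the plotted point, and the fraction gives the smiling versus not-smiling split.

```java
import java.util.List;

// Hypothetical helper for the smiling charts.
final class SmileSeries {

    // One facelog row: the isSmiling flag and smilingConfidence (assumed to be 0-100).
    record SmileReading(boolean smiling, int confidence) {}

    private SmileSeries() {}

    // Chart value: +confidence when smiling, -confidence when not smiling.
    static int signedValue(SmileReading r) {
        return r.smiling() ? r.confidence() : -r.confidence();
    }

    // Proportion of readings classified as smiling (0.0 when there are no readings).
    static double fractionSmiling(List<SmileReading> readings) {
        if (readings.isEmpty()) {
            return 0.0;
        }
        long smiling = readings.stream().filter(SmileReading::smiling).count();
        return (double) smiling / readings.size();
    }

    public static void main(String[] args) {
        List<SmileReading> day = List.of(
                new SmileReading(true, 80), new SmileReading(false, 35),
                new SmileReading(false, 90), new SmileReading(false, 10));
        day.forEach(r -> System.out.println(signedValue(r)));
        System.out.println(fractionSmiling(day));
    }
}
```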

To conclude, this was a massively over-engineered, unnecessarily complicated way to answer the question: has today been a good day?

The answer, incidentally, is yes, it was okay, thanks.

[1] Yes, I know QuickTime Java has been deprecated. But I didn’t have the time to learn Objective-C just to try a quick hack, and despite being deprecated, QuickTime Java does, for now, still work.

Very interesting, Dale. Thank you for the post. Just like you said, it would be interesting to use it on some of the people visiting the demo lab and show the results to them at the end of their visit.

Hey Dale,

As this is an extension of your previous work at home, I assume it’s still taking the highest-ranked “emotion” it detects. I’d be fascinated to see a comparison between a still shot and an avatar mixing all the “emotion” values, and to see how accurate that is. If it’s accurate enough, it might be a nice way of representing some of the data: showing the world how you currently feel without exposing anything confidential in the background. And of course it would be amusing for the generally ridiculous faces the avatar will inevitably come up with.

Also, do you reckon (I’m not looking for numbers to quantify it) you’re getting a more accurate representation with a better camera, you being closer and polling 2/3 more often?

Rich – That’s an interesting idea… will have a look for an easy way to generate avatars. As for whether the Mac camera pics make for more accurate data? Dunno… instinctively it seems like it should, but I’ve not seen anything obvious in the data to back that up.