I’ve moved a couple of bits of Machine Learning for Kids into OpenWhisk functions. In this post, I’ll describe what I’m trying to solve by doing this, and what I’ve done.

Background

I’ve talked before about how I implemented Machine Learning for Kids, but the short version is that most of it is a Node.js app, hosted in Cloud Foundry so I can easily run multiple instances of it.

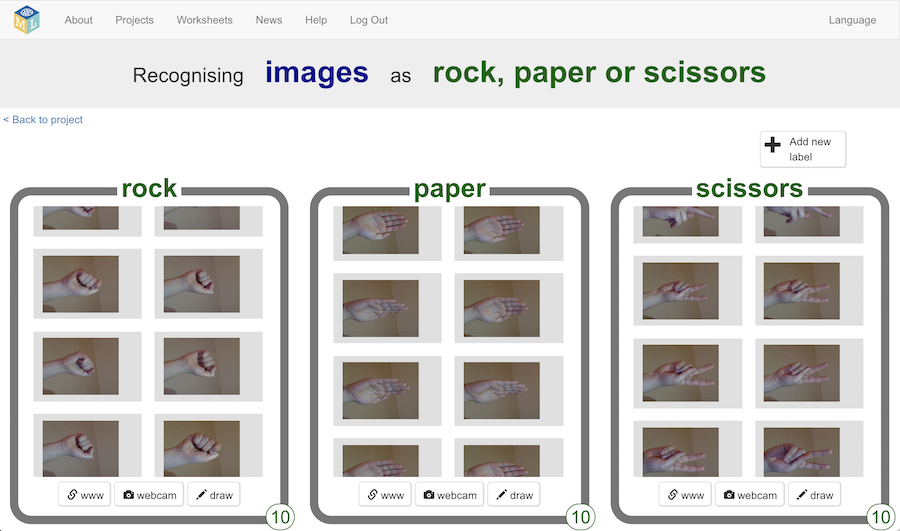

The most computationally expensive thing the site has to do is for projects that train a machine learning model to recognize images.

In particular, the expensive bit is when a student clicks on the Train new machine learning model button in one of those projects.

When they do that, at a high level, this is what has to happen:

- All of their images will be downloaded

  - Images they created themselves (e.g. images they’ve drawn, photos they’ve taken with the webcam, etc.) will be retrieved from Cloud Object Storage

  - Images they found on the web (which I only store as URLs) will be downloaded from their original websites

- Downloaded images will be resized to a size suitable for training (images created and stored in Cloud Object Storage will already be the right size)

- A new zip file is created with all of the images, ready for uploading to the Watson Visual Recognition service (sometimes more than once using different Watson credentials)
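As a rough illustration of the steps above (a sketch, not the site's actual code), the two kinds of training image could be modelled like this. The type, field and function names are all hypothetical; the only thing taken from the description above is that stored images are already the right size, while web images need downloading and resizing.

```typescript
// Hypothetical model of the two kinds of training image described above.
type TrainingImage =
  | { kind: "stored"; objectKey: string } // created by the student, kept in Cloud Object Storage
  | { kind: "web"; url: string };         // found on the web, only the URL is stored

// Only web images need resizing; stored ones are already the right size.
function needsResize(image: TrainingImage): boolean {
  return image.kind === "web";
}

// Count how much of each kind of work a training request involves.
function planWork(images: TrainingImage[]): { fetchOnly: number; fetchAndResize: number } {
  const fetchAndResize = images.filter(needsResize).length;
  return { fetchOnly: images.length - fetchAndResize, fetchAndResize };
}
```

A project made up mostly of web images is the expensive case, because every one of them has to be fetched and resized before the zip file can be built.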

The problems

Compared with everything else that the site has to do, resizing large images and creating large zip files are both fairly expensive in terms of memory and CPU.

This is compounded by the fact that it tends to happen in bursts.

If I’m unlucky, a class of thirty students all click their Train ML button at roughly the same time.

When I’m really unlucky, a STEM event of a hundred students all click the Train ML button at the same time!

(Teachers often get their classes to go through projects together, so they all move on to the next step at the same time. It is more common than you’d expect for dozens of training requests to hit my site at once.)

This means I had two problems:

Problem 1: The site was horribly over-provisioned

The rest of the site server is efficient and light-weight, using hardly any CPU, memory or local disk.

But I had to provision it to cope with the worst possible spikes without crashing with out-of-memory errors. That meant scaling up the memory footprint of the site instances to a level far beyond what the site has needed most of the time.

I’m running the site on a shoestring budget, so I can’t afford to run it with far more resource than it needs!

Problem 2: I had to throttle it to be slower than it could be

To cope with the worst spikes without blowing up with out-of-memory errors, I was fairly aggressive about avoiding parallelising the task described above.

For example, instead of processing all of a student’s images at once in parallel, I’d limit it to do a couple at a time. That wasn’t necessary if only one or two students had clicked the Train button – it would’ve worked okay to just do lots, if not all, of their images in parallel. But to protect against a possible spike of loads of students, I was doing it slower for everyone.
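To make the "couple at a time" idea concrete, here is a minimal sketch of that kind of throttling in TypeScript. It is not the code the site used: `mapWithLimit` and its parameters are hypothetical names, and the real implementation may have limited concurrency in a different way.

```typescript
// Hypothetical helper: run `worker` over every item, but never have
// more than `limit` calls in flight at the same time.
async function mapWithLimit<T, R>(
  items: readonly T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  const results: R[] = new Array(items.length);
  let next = 0;

  // Each "lane" keeps pulling the next unprocessed index until none are left.
  async function lane(): Promise<void> {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  // Start at most `limit` lanes (and at least one, so an empty list still resolves).
  const laneCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}
```

With a limit of 2, a student with fifty images waits for roughly twenty-five rounds of downloads and resizes, even if the server is otherwise idle. That is the trade-off described above: a low limit protects memory during a spike, but everyone pays for it all the time.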

Training image models is already annoyingly slow. Making it even slower isn’t great for a tool used by classes of impatient children!

Both of these problems were only getting worse.

The site continues to get busier, and I’m getting more and more schools and code clubs using the site. I was rapidly approaching a point where I’d have to scale up the site app instances even more to avoid the risk of out-of-memory errors.

The solution

You can probably guess from the fact that I’ve been talking about OpenWhisk recently (or just the title and intro to this post!) that I think these problems are well suited to serverless.

- It’s a very spiky workload.

- It’s infrequent.

- When it happens, it is resource intensive – an order of magnitude more than the usual base workload level.

- Demand typically comes in bursts.

- The processing is well suited to being parallelised.

This is just the sort of thing that I’ve talked about as being ideal for serverless.

I decided to move the task of creating training data zip files for image projects to a couple of new OpenWhisk functions.

That means that now when a student clicks on the Train new machine learning model button…

- The main Machine Learning for Kids API server will collect the list of training data images from the training data database

- The list of training images is POSTed to an OpenWhisk function called CreateZip.

- The CreateZip function invokes a second function, ResizeImage, for every image that needs to be downloaded and resized.

- The CreateZip function creates a zip file with the responses from each of the ResizeImage function invocations, and returns it.

- The main Machine Learning for Kids API server submits it to the Watson Visual Recognition service

Splitting it into two functions means I don’t need to throttle the image resizing. I can do all of them in parallel, without needing to scale the CreateZip function to cope with it, because each image will be handled by a separate function instance of ResizeImage.
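The fan-out from CreateZip to ResizeImage can be sketched roughly like this. It is an illustration under assumptions rather than the real implementation: the `ResizeRequest` and `ResizedImage` shapes are made up, and `invoke` is a stand-in for whatever performs a blocking OpenWhisk action invocation, passed in so the sketch doesn't depend on a particular client library.

```typescript
// Hypothetical shapes: the real function parameters and responses may differ.
interface ResizeRequest { url: string; }
interface ResizedImage { filename: string; base64: string; }

// Stand-in for a blocking OpenWhisk action invocation (an assumption, injected
// so this sketch is independent of any particular client library).
type InvokeAction = (name: string, params: ResizeRequest) => Promise<ResizedImage>;

// Start one ResizeImage invocation per image, all at once, and wait for all of them.
async function resizeAll(images: ResizeRequest[], invoke: InvokeAction): Promise<ResizedImage[]> {
  return Promise.all(images.map((image) => invoke("ResizeImage", image)));
}
```

Because each invocation runs in its own function instance, there is no concurrency limit here. One thing to note about this sketch is that `Promise.all` rejects as soon as any single invocation fails, so a real version would need to decide how to handle an image that can't be downloaded.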

The code

So that was it. I’ve been tinkering with this off-and-on for a week. Look at the pull request with the initial implementation to see more about how I approached it.

I’m assuming it’ll change a bit over the first few weeks as I learn about all the things I did wrong (I’m still fairly new to OpenWhisk!) so you can find the current version of the functions in the serverless-functions folder in github.com/IBM/taxinomitis.

I wrote it in TypeScript for consistency with the rest of the site, and it wasn’t without challenges.

For example, the previous implementation for resizing images in the main site app used sharp.

sharp has some native code bits that I couldn’t figure out how to get into a Node.js function. The OpenWhisk Node.js runtime already includes imagemagick so I switched to using gm for the serverless implementation. It’s noticeably slower, so that’s frustrating.

Plus it was a little fiddly to package. I ended up using webpack to get into a state that would fit with OpenWhisk. The openwhisk-typescript project was super useful in learning how to do it. But arguably this could’ve been better implemented in a different language that can do image processing faster.

No-one will notice!

That’s it. I’ve protected against future growth of the site, and hopefully reduced my hosting costs a bit. But if I’ve done this right, no-one will notice any difference. 🙂
