In this post, I want to describe how to use AsyncAPI to document how you’re using Apache Kafka. There are already great AsyncAPI “Getting Started” guides, but AsyncAPI supports a variety of protocols, and I haven’t found an introduction written specifically from the perspective of a Kafka user.

I’ll start with a description of what AsyncAPI is.

“an open source initiative … goal is to make working with Event-Driven Architectures as easy as it is to work with REST APIs … from documentation to code generation, from discovery to event management”

The most obvious aspect is that it is a way to document how you’re using Kafka topics, but the impact is broader than that: a consistent approach to documentation enables an ecosystem that includes things like automated code generation and discovery.

| what Kafka calls | AsyncAPI describes as |

|---|---|

| broker | message broker |

| producer | publisher |

| consumer | subscriber |

| event / record / message | message |

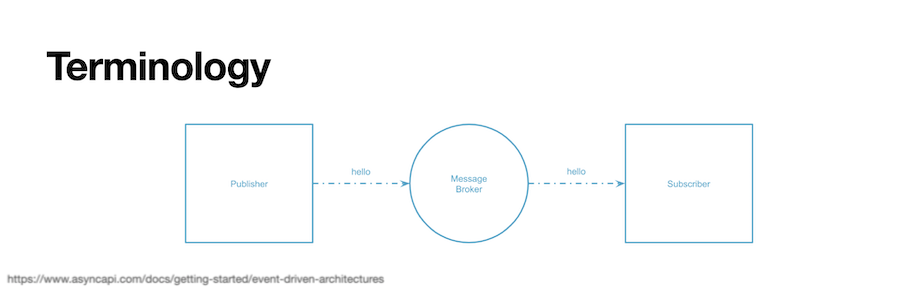

| topic | channel |

Most terminology in AsyncAPI will be recognizable to Kafka users. Describing Kafka Producers as “publishers” and Kafka Consumers as “subscribers” isn’t that unusual, and something I’ve heard people do before, particularly for users who’ve come from messaging worlds like JMS.

Perhaps more unexpected is that, in AsyncAPI, Kafka topics are described as “channels”.

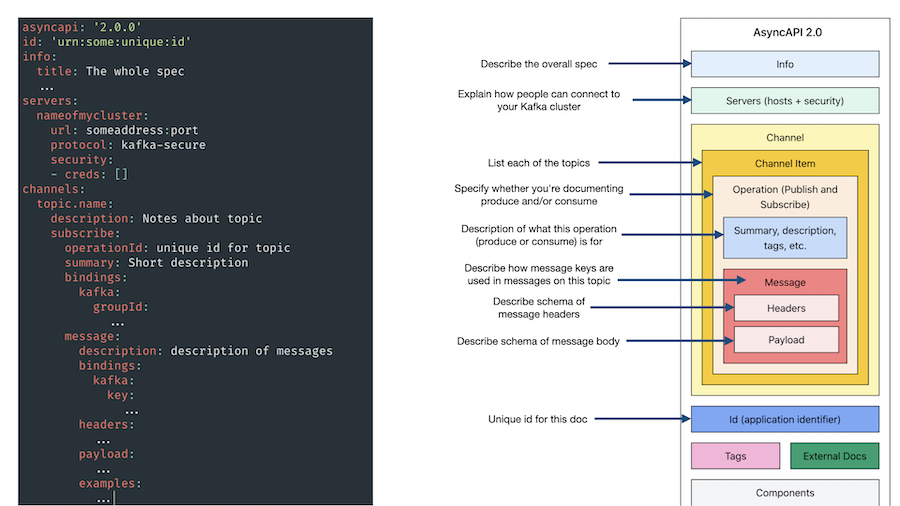

An AsyncAPI spec is a YAML file (or JSON, if you prefer) that formally documents how to connect to the Kafka cluster, the details of the Kafka topic(s), and the type of data in the messages on the topics. It includes both formal schema definitions and space for free-text descriptions.

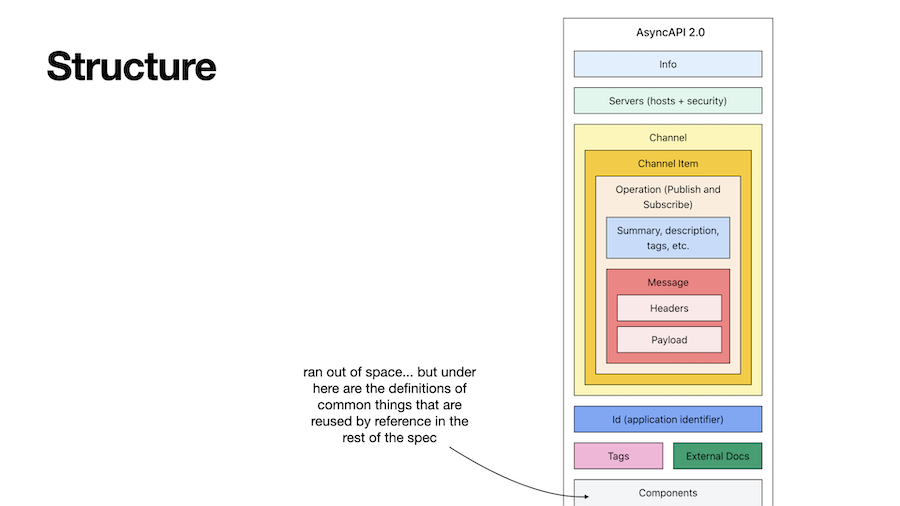

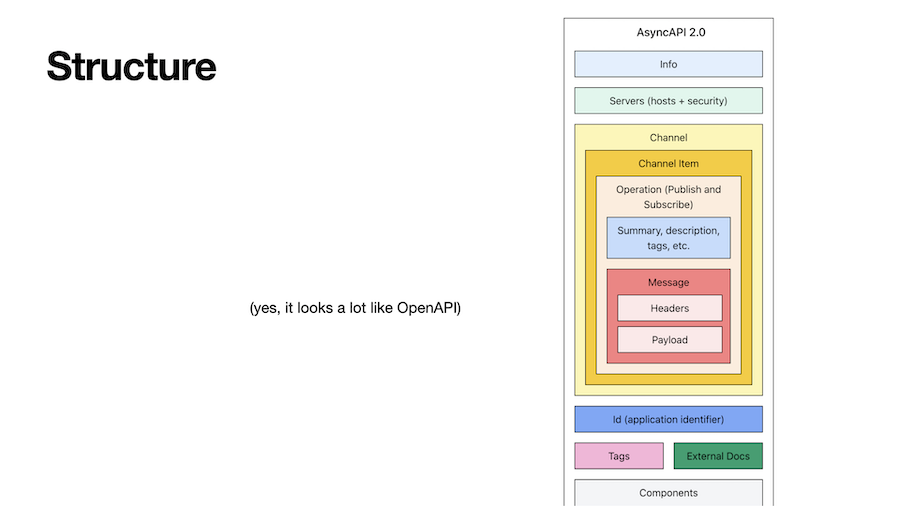

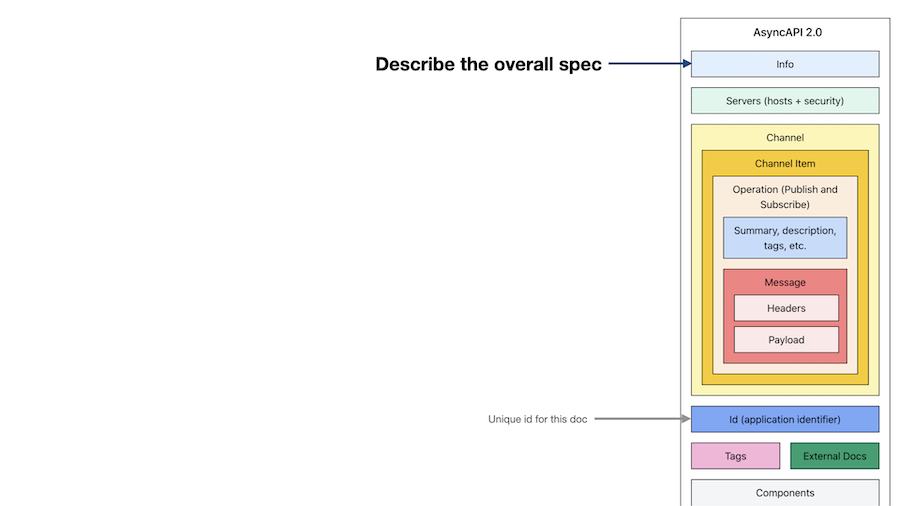

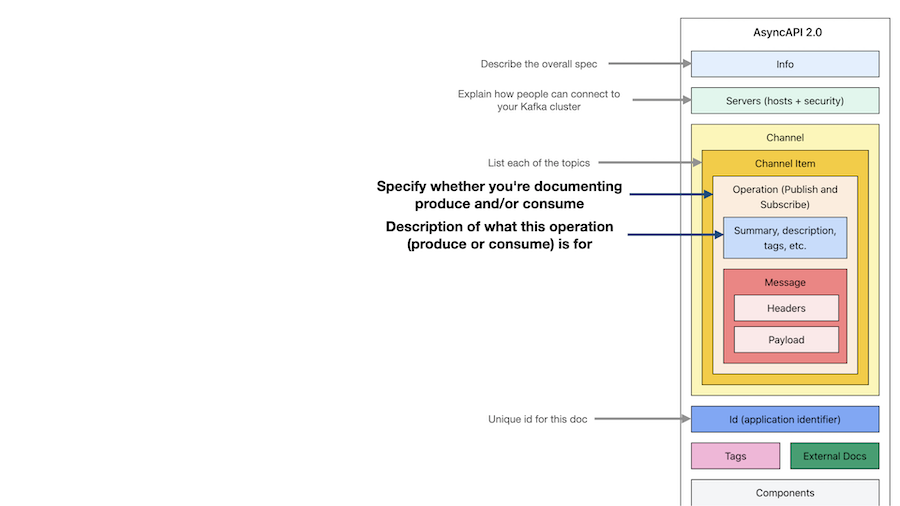

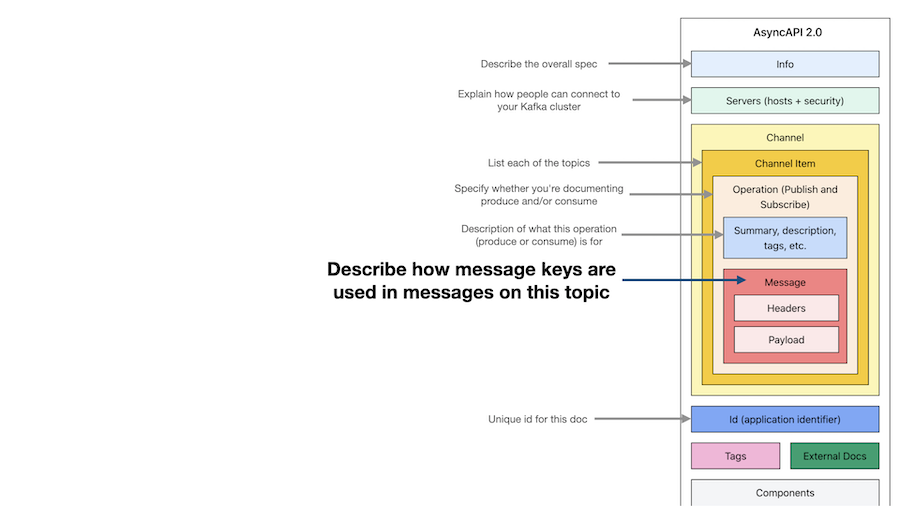

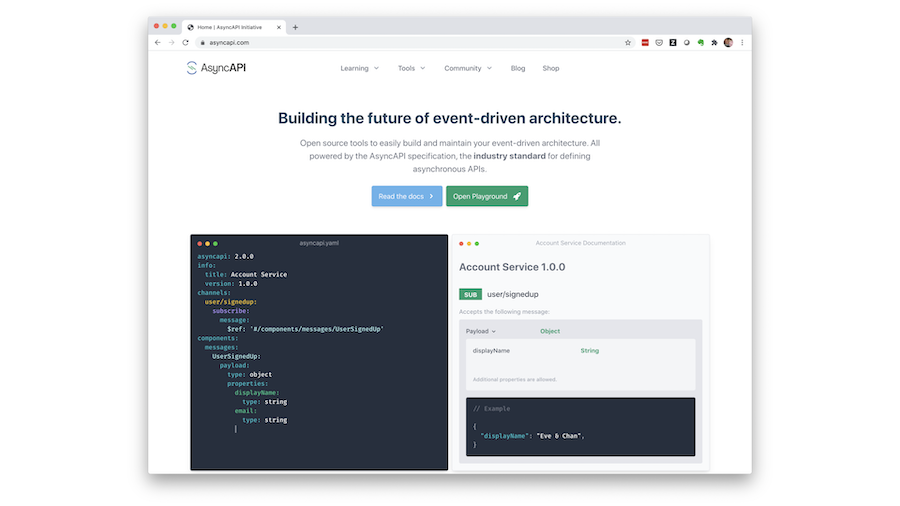

This graphic is used on the AsyncAPI web site to describe the structure of an AsyncAPI spec.

It’s a bit taller than I’m showing here, but these are the important sections.

The bottom bits in “Components” are where you can put definitions that can be reused by reference throughout the rest of your spec.

For this post, I want to go through the different sections in this structure, and describe what they mean through the lens of documenting Kafka.

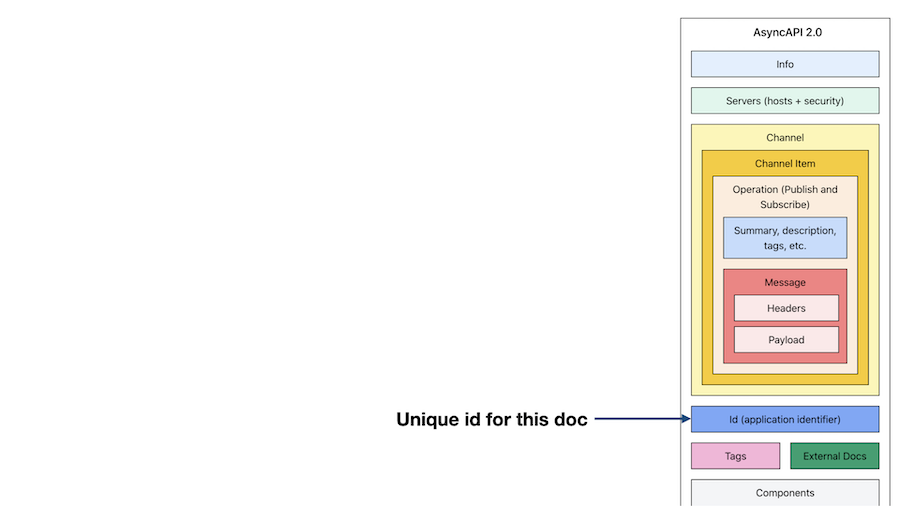

I’ll start with the simplest bit: uniquely identifying your spec.

AsyncAPI recommends using URNs for this, although I have seen some examples that use URLs.

The important thing is that you have an id with a unique string.
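As a rough sketch, the identifier sits at the top level of the spec. I'm assuming the 2.0.0 syntax of AsyncAPI here, and the URN is a made-up value for illustration.

```yaml
# Top of an AsyncAPI document (2.0.0 syntax assumed)
asyncapi: '2.0.0'
# Hypothetical URN: any unique string that suits your organisation will do
id: 'urn:com:example:kafka:orders'
```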

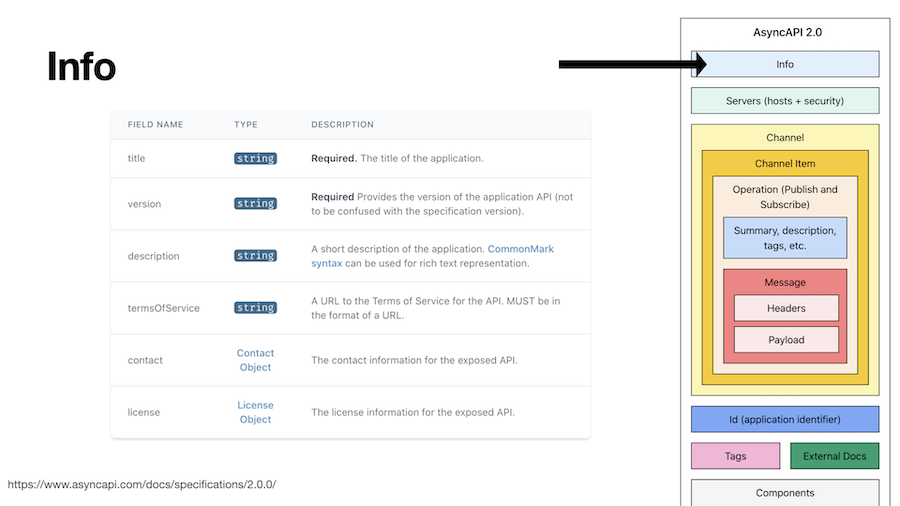

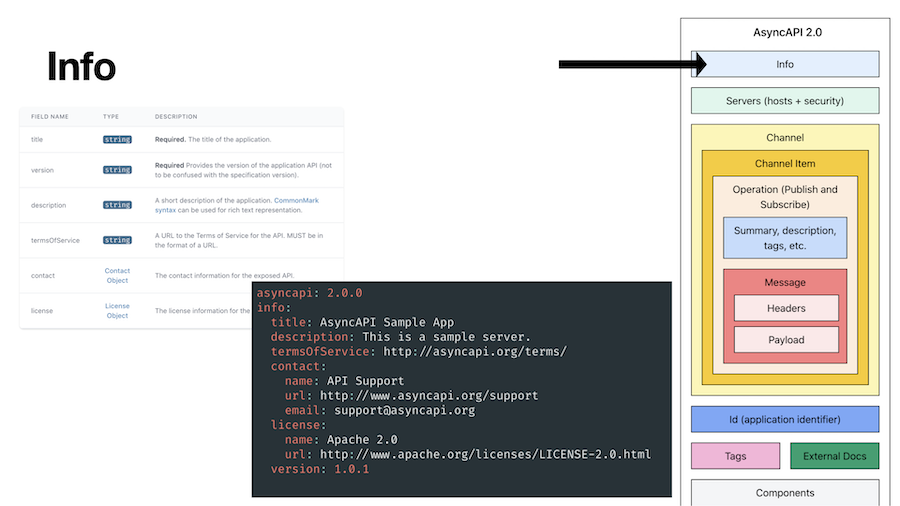

Next, you can provide high-level info about what you’re documenting in this spec.

Aside from the obvious fields like a title, version number and contact details, the interesting bit here is a description. This is a place to capture some context for the use of Kafka being defined. And you can use markdown to include rich-text formatting.
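A minimal info section might look something like the following. The title, contact details and description text are all invented for this example.

```yaml
info:
  # All values here are hypothetical
  title: Orders events
  version: '1.0.0'
  contact:
    name: Platform team
    email: platform@example.com
  # A block scalar keeps multi-line markdown readable
  description: |
    Events describing the lifecycle of customer orders.

    * **Markdown** formatting is allowed here
    * so you can include lists, links and emphasis
```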

The result can look something like this.

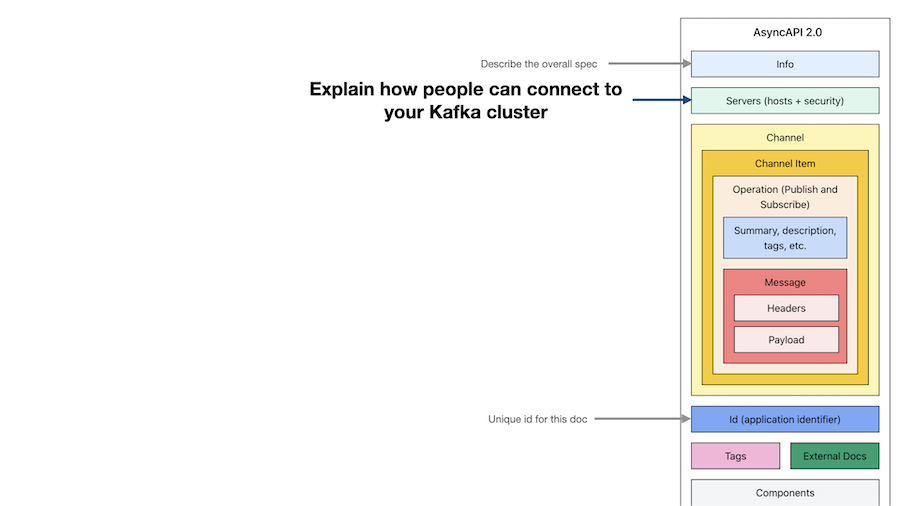

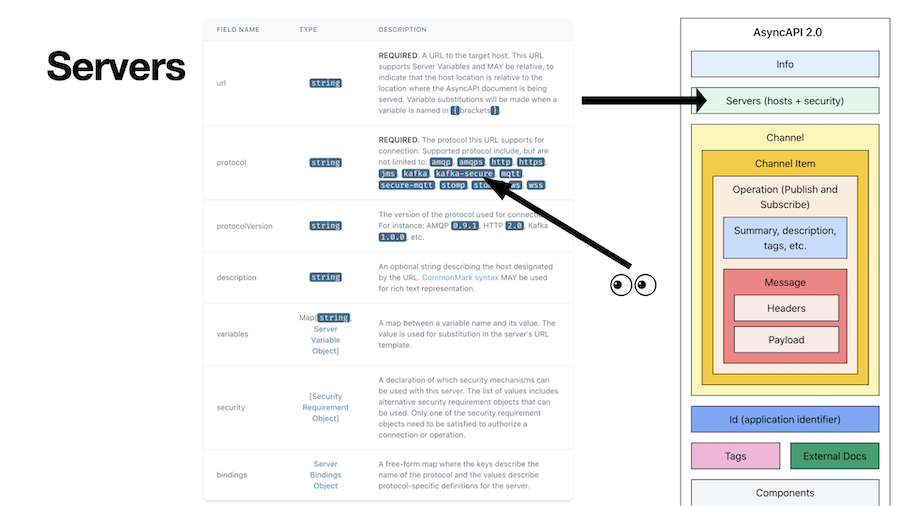

Next, you provide details of your Kafka cluster, so people know how to connect their client applications.

The servers section of the spec is made up of a list of server objects, each defining a Kafka broker and each identified by a unique name.

The most important bit is the URL field, where you provide the connection address for the Kafka broker.

The other bit you need to specify is the protocol. AsyncAPI can be used to document a variety of systems, so here is where you identify that you’re using Kafka.

Notice that there are two flavours of the protocol for Kafka.

If your Kafka cluster doesn’t have auth enabled, then you use the protocol kafka.

If client applications are required to provide credentials, then you identify this by using the protocol kafka-secure.
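As a sketch of a server object for a single broker without auth (the server name and address are placeholders I've made up):

```yaml
servers:
  # "broker1" is the unique name for this server object
  broker1:
    # Hypothetical connection address for the Kafka broker
    url: 'broker1.example.com:9092'
    # Use kafka-secure instead if clients must provide credentials
    protocol: kafka
    description: First broker in the cluster
```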

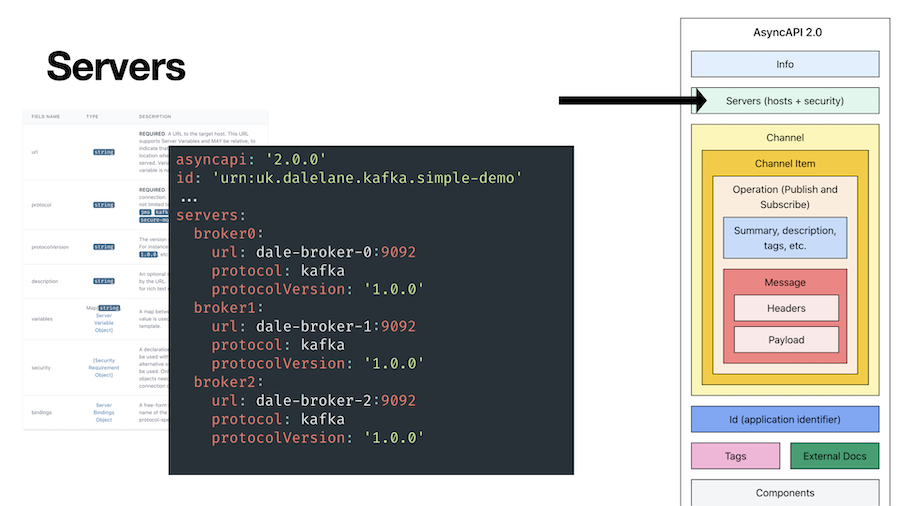

The result looks something like this.

As you’ll have more than one Kafka broker in your cluster, you’ll probably want to identify each of them.

This means that the bootstrap address for Kafka clients wanting to connect should be formed by combining the URLs for each of the server objects. (And this is what AsyncAPI’s Java Spring code generator does.)
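For a three-broker cluster, that could be sketched like this. The broker names and addresses are hypothetical, and the bootstrap address in the trailing comment is just the URLs joined with commas.

```yaml
servers:
  broker1:
    url: 'broker1.example.com:9092'
    protocol: kafka
  broker2:
    url: 'broker2.example.com:9092'
    protocol: kafka
  broker3:
    url: 'broker3.example.com:9092'
    protocol: kafka
# A Kafka client would then use a bootstrap address along the lines of:
# broker1.example.com:9092,broker2.example.com:9092,broker3.example.com:9092
```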

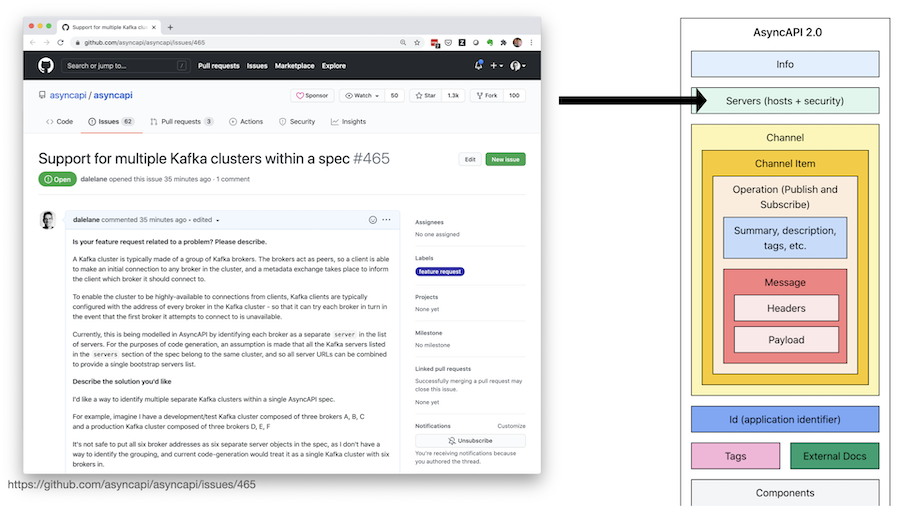

This is a limitation, as it prevents you from including multiple Kafka clusters (such as a production cluster and dev/test/staging clusters) in a single AsyncAPI spec. I think it would help to extend the spec to enable identifying multiple clusters, which is a suggestion I’ve raised with the AsyncAPI community.

In the meantime, you could just list all brokers from all your clusters, and rely on using the description or extension fields to explain which ones are in which cluster. (You would have to be careful of code generators or other parts of the AsyncAPI ecosystem that will misinterpret them as all being members of one large cluster.)

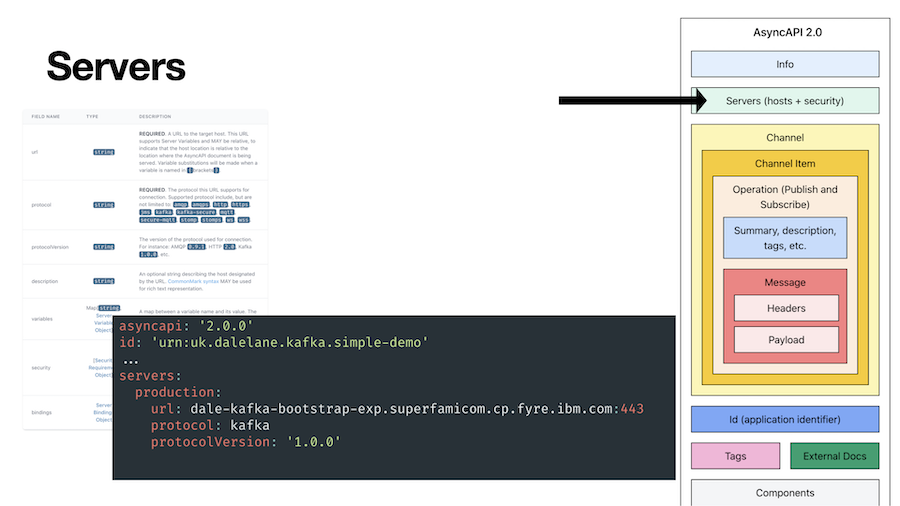

For those of us who are running Kafka in Kubernetes, and fronting it with a single bootstrap service or route that round-robins each broker in the cluster, we can just use that address in a single server object.

The same limitation about multiple clusters will apply though.

You could identify multiple Kafka clusters, each as a separate server object, and rely on naming or description fields to make it clear that these are actually separate clusters. But this wouldn’t be consistent with some existing use of AsyncAPI, or the assumptions in supporting tools in the ecosystem, like the Java Spring code generator.

For now, I think it is better to avoid doing this.

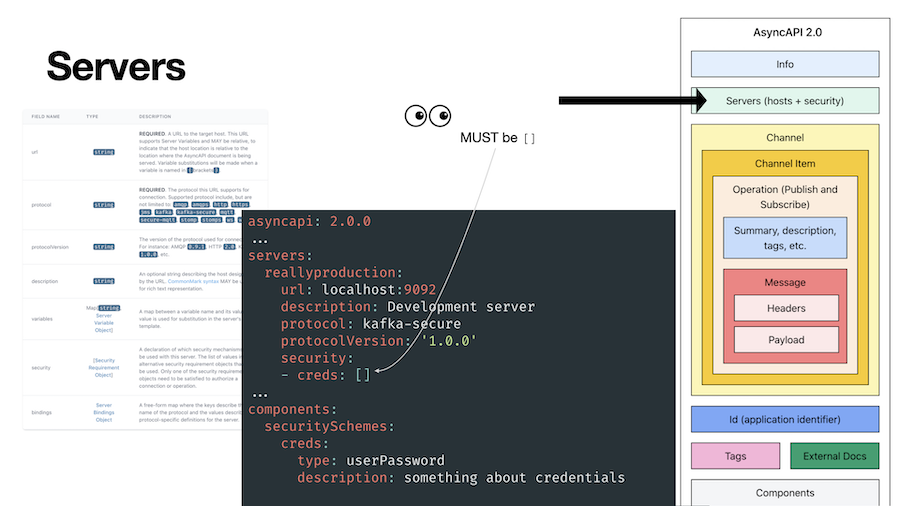

As I mentioned above, if your Kafka cluster is secured, you identify this by specifying kafka-secure as the protocol.

You identify the type of credentials by adding a security section to the server object. The value you put in there is the name of a securityScheme object you define in the components section.

Notice that the contents of the security value in the server object are just []. The important bit is the name, which matches up with the details down in components.securitySchemes.

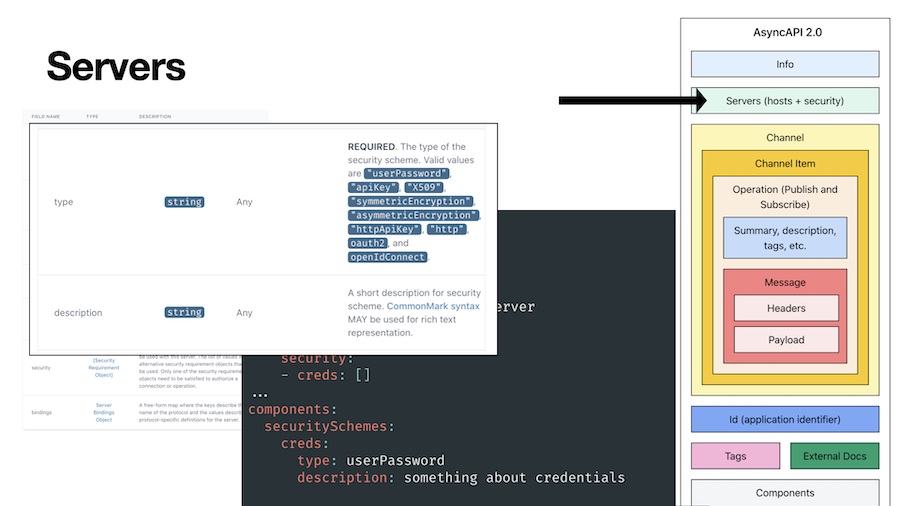

The types of security scheme that you can specify aren’t Kafka-specific, so the best option is to choose the value that describes your type of approach to security.

For example, if you’re using SASL/SCRAM, that is a username/password-based approach to auth, so you could describe this as userPassword.
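Putting those pieces together, a secured broker might be described roughly like this. The scheme name `kafkaCreds` and the broker address are names I've invented; what matters is that the same name appears in both places.

```yaml
servers:
  broker1:
    url: 'broker1.example.com:9092'
    protocol: kafka-secure
    security:
      # "kafkaCreds" is a hypothetical name; the empty list is expected
      - kafkaCreds: []

components:
  securitySchemes:
    # Must match the name used in the server object above
    kafkaCreds:
      type: userPassword
      description: Username and password for SASL/SCRAM authentication
```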

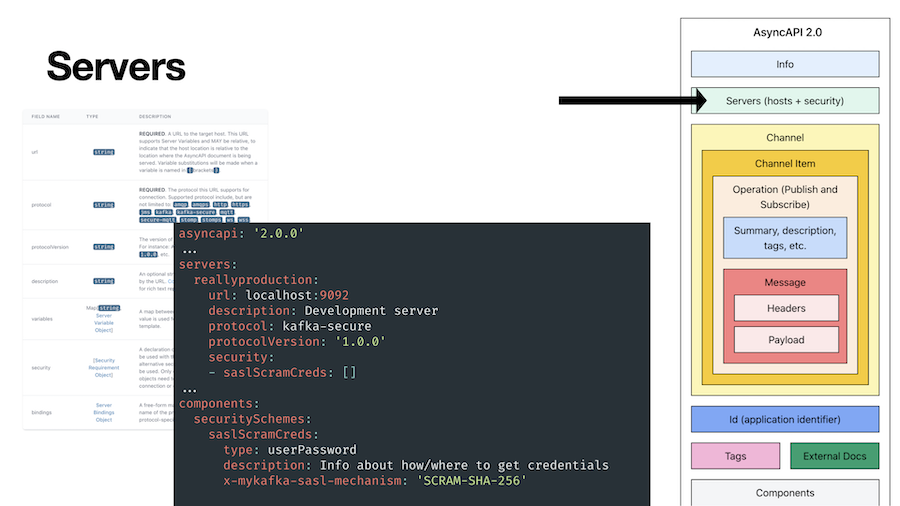

If you want to be more specific about the security options that Kafka clients need to use, then you could explain that in the description field, or you could use extensions to document it.

As with OpenAPI, you can add additional attributes to the spec by prefixing them with x- to identify them as your own extensions to AsyncAPI.
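For example, a security scheme could carry the Kafka client settings as extension fields. The `x-` attribute names below are purely my own invention for illustration and are not defined by AsyncAPI, so tools won't know what to do with them.

```yaml
components:
  securitySchemes:
    kafkaCreds:
      type: userPassword
      description: Credentials for SASL/SCRAM authentication
      # Hypothetical extension fields, not part of the AsyncAPI spec
      x-sasl-mechanism: SCRAM-SHA-512
      x-security-protocol: SASL_SSL
```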

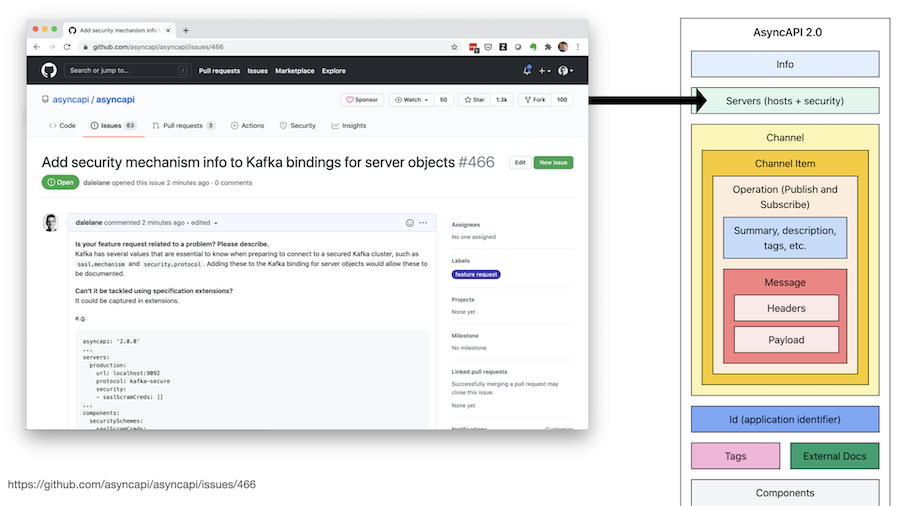

The problem with either of these approaches is that it won’t lead to people documenting these standard aspects of configuring Kafka clients in a consistent way, and would be harder to exploit in things like code generators.

I think it would help to extend the spec to include Kafka-specific security config options, which is a suggestion I’ve raised with the AsyncAPI community.

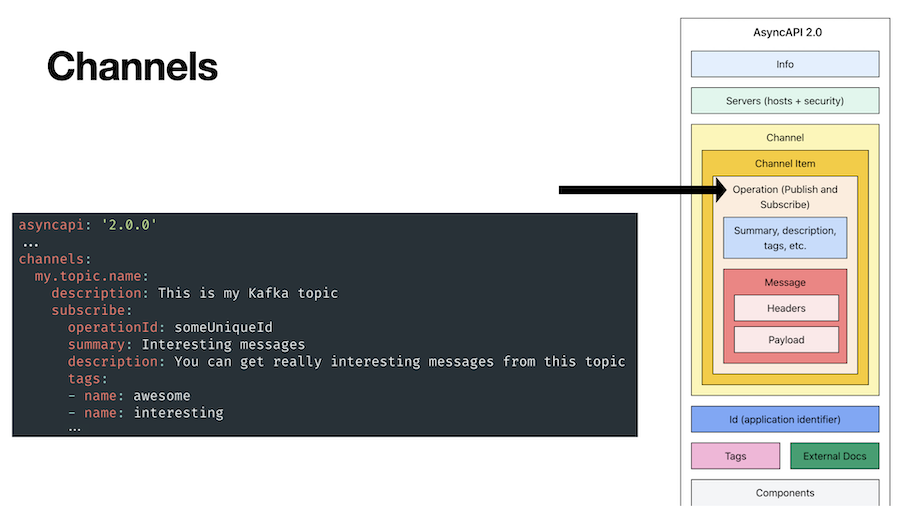

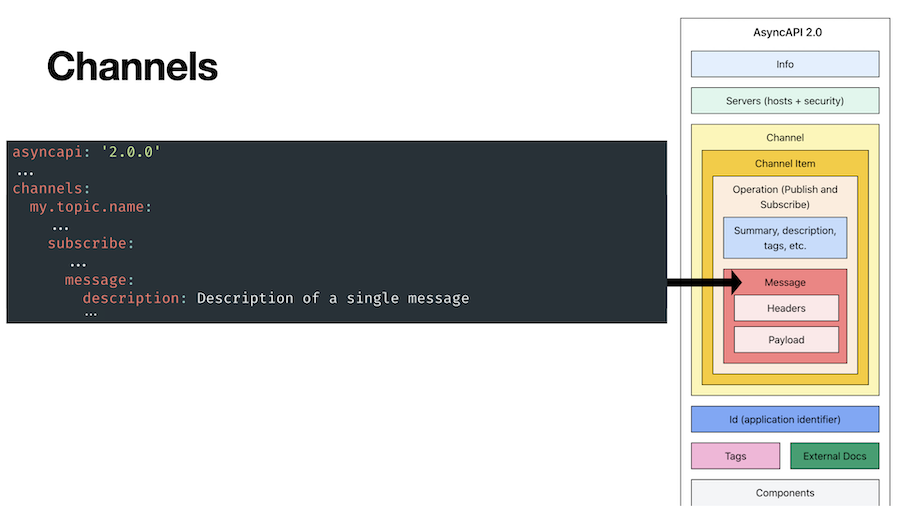

The next thing to do is to identify your Kafka topics.

As I mentioned above, in AsyncAPI you describe your topics as channels.

The channels section is made up of channel objects, each named using the name of your topic.

For each topic, you need to identify the operations that you want to describe in the spec.

As I mentioned above, AsyncAPI describes producing and consuming as publish and subscribe operations.

You can start by describing the operation – giving it a unique id, a short one-line text summary, and a more detailed description (which can include markdown formatting).
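A channel with one described operation could be sketched as follows, assuming AsyncAPI 2.0.0 syntax. The topic name and operation id are hypothetical.

```yaml
channels:
  # The channel name is the Kafka topic name (hypothetical here)
  orders.created:
    description: One event for each new order
    subscribe:
      # Unique id for this operation
      operationId: consumeNewOrders
      summary: Receive new order events
      description: |
        Consume from this topic to be notified of **new** orders.
```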

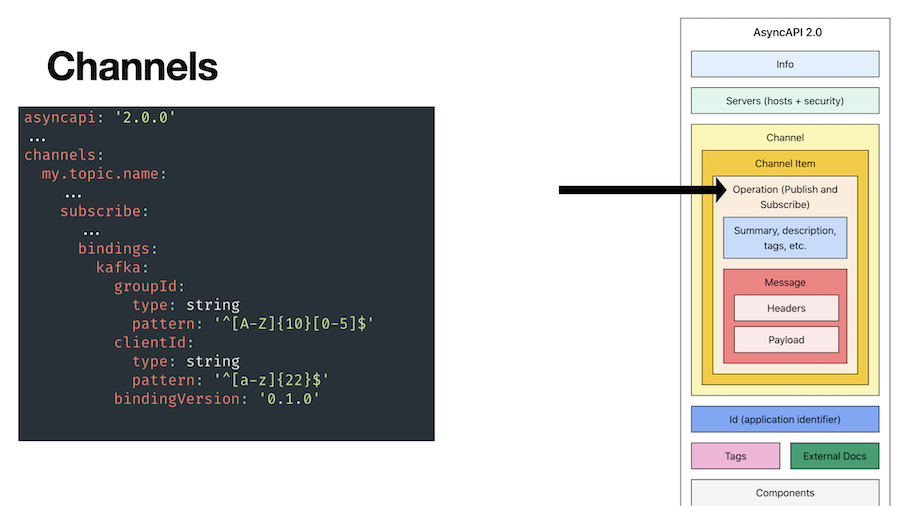

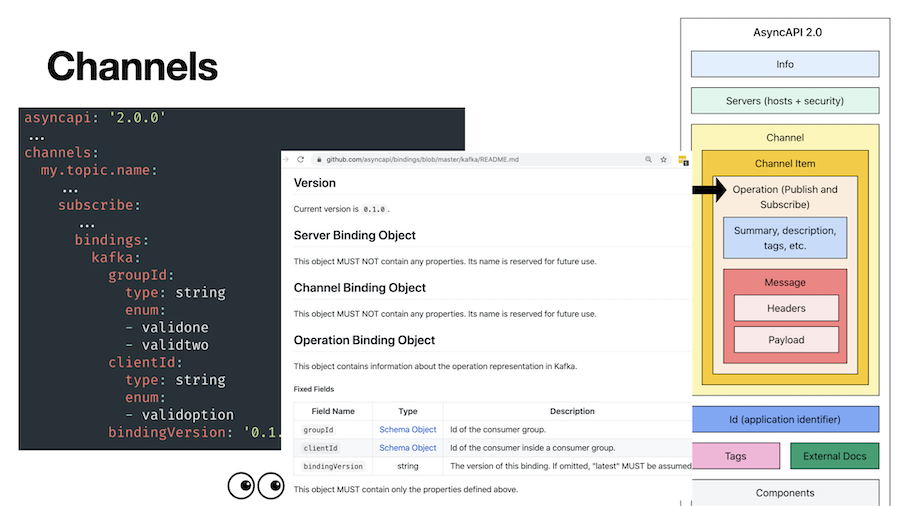

AsyncAPI puts protocol-specific values in sections called bindings.

Next, you can specify the values that Kafka clients should use to perform this operation in a bindings section.

The values you can describe are the consumer group id, and the client id.

If there are expectations about the format of these values, then you can describe them here, such as by using regular expressions.

Alternatively, if there is a discrete set of valid values, then you can enumerate all of them here instead.

Note that these are the only Kafka-specific attributes that are included in the bindings for Kafka operations.
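As an illustration, the bindings for an operation might look like this. I'm assuming version 0.1.0 of the Kafka bindings, and the pattern and enum values are invented.

```yaml
channels:
  orders.created:
    subscribe:
      operationId: consumeNewOrders
      bindings:
        kafka:
          # Consumer group ids are expected to match a pattern
          groupId:
            type: string
            pattern: '^orders-[a-z0-9]+$'
          # Client ids come from a fixed set of values
          clientId:
            type: string
            enum:
              - orders-app-1
              - orders-app-2
          bindingVersion: '0.1.0'
```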

Next, you describe the messages on the topic.

As with all the other levels of the spec, you can provide background and narrative in a description field.

Again, Kafka-specific values go into a bindings section. For messages, the value you can describe is how keys are used in messages on this topic.

You can describe the type – such as identifying whether you are using numeric or string-based keys. You can provide a regex if there is a pattern to how keys are defined. Or if you’re using a predefined, discrete set of keys, you can list them all in an enum.

As before, note that this is the only Kafka-specific attribute that is included in the bindings for Kafka messages.
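A sketch of a message with a Kafka key binding, again assuming version 0.1.0 of the Kafka bindings and using made-up key values:

```yaml
channels:
  orders.created:
    subscribe:
      message:
        description: Details of a newly created order
        bindings:
          kafka:
            # Keys are strings taken from a predefined set (hypothetical values)
            key:
              type: string
              enum:
                - region-eu
                - region-us
            bindingVersion: '0.1.0'
```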

Next, you document the headers on the messages.

You can list each header as a property of the headers object, and for each header provide a description for what it is for, and the type of data in the value.

There is also space to include a set of examples of what the headers can look like.
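Headers could be documented along these lines. The header names and example values are hypothetical, and I'm assuming the 2.0.0 form of message examples, where each example is an object that can contain a `headers` entry.

```yaml
channels:
  orders.created:
    subscribe:
      message:
        headers:
          type: object
          properties:
            # Hypothetical header names
            correlation-id:
              description: Identifier used to trace a request across services
              type: string
            source-system:
              description: Name of the application that produced the event
              type: string
        examples:
          - headers:
              correlation-id: 'abc-123'
              source-system: 'web-shop'
```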

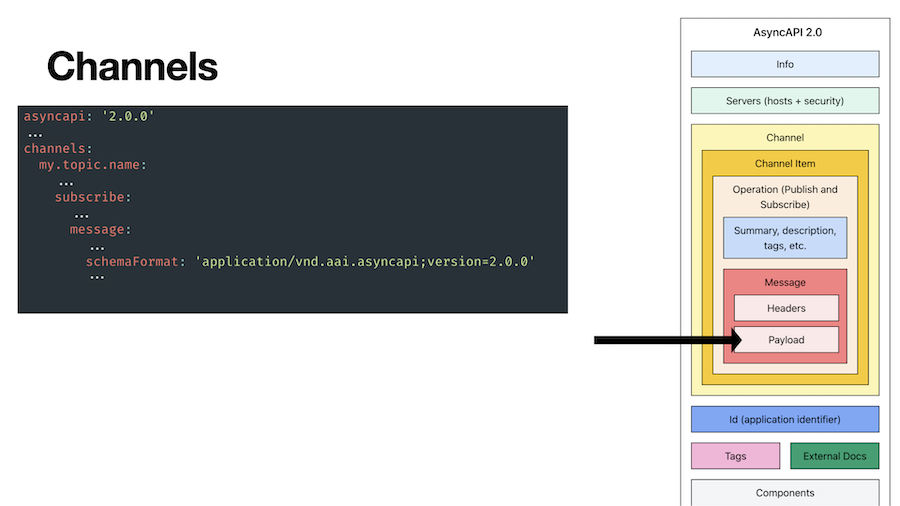

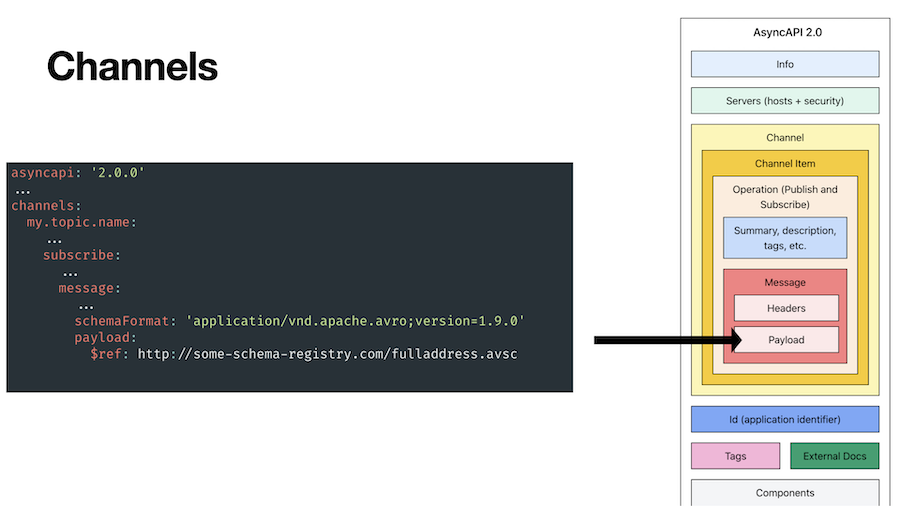

Finally, you describe the message body.

By default, you do this using AsyncAPI’s own schema format.

This means identifying the type of data, any restrictions that apply, and providing some examples.

If messages contain multiple fields, you can identify all of these, and specify which ones are required and which are optional.
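A payload with a mix of required and optional fields might be sketched like this (the field names and restrictions are invented for the example):

```yaml
channels:
  orders.created:
    subscribe:
      message:
        payload:
          type: object
          # Fields not listed here are optional
          required:
            - orderId
            - quantity
          properties:
            orderId:
              type: string
              description: Unique identifier for the order
              examples:
                - 'ORD-0001'
            quantity:
              type: integer
              minimum: 1
            notes:
              type: string
              description: Optional free-text comments from the customer
```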

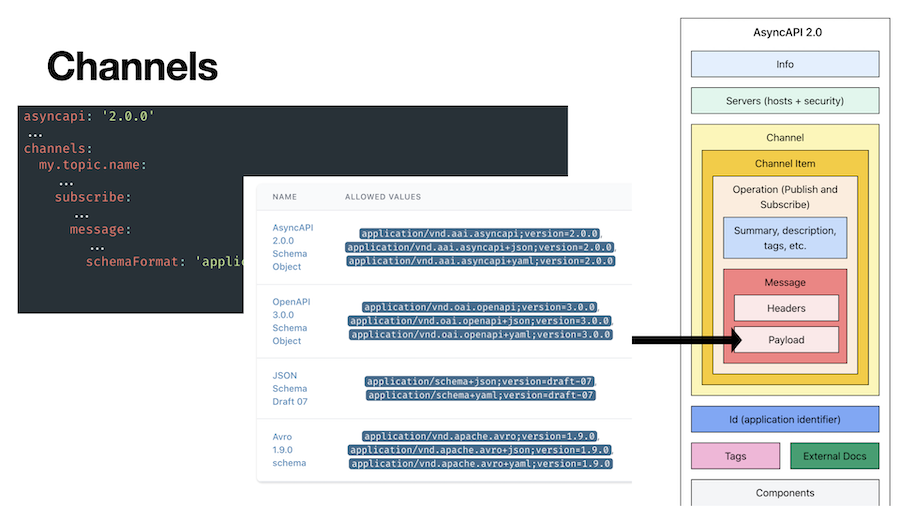

But you don’t have to use AsyncAPI’s own schema format – a few other approaches are supported.

For Kafka users, the most useful of these is likely to be Apache Avro. If you’re using Avro to serialize and deserialize your messages (and you really should), then you can include a reference to your Avro schema.

For example, if your AsyncAPI spec is in a file on a filesystem, you can provide the relative location of the Avro schema file.

Alternatively, you can provide an absolute URL for where the schema is hosted, such as in a schema registry.
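Referencing an Avro schema could look roughly like this. The file path and registry URL are placeholders, and the `schemaFormat` value assumes the Avro 1.9.0 format string listed in the AsyncAPI 2.0.0 spec.

```yaml
channels:
  orders.created:
    subscribe:
      message:
        schemaFormat: 'application/vnd.apache.avro;version=1.9.0'
        payload:
          # Relative path to a hypothetical Avro schema file
          $ref: './schemas/order-created.avsc'
          # Or an absolute URL, such as a hypothetical schema registry address:
          # $ref: 'https://registry.example.com/schemas/order-created.avsc'
```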

Reusing your existing Avro schemas means you don’t need to define the data types for your Kafka payloads multiple times, and the AsyncAPI spec supplements what Avro captures with information about the topics and Kafka clusters.

And that’s it.

I think there is a lot of value in capturing this detail about how you’re using Kafka in a consistent way.

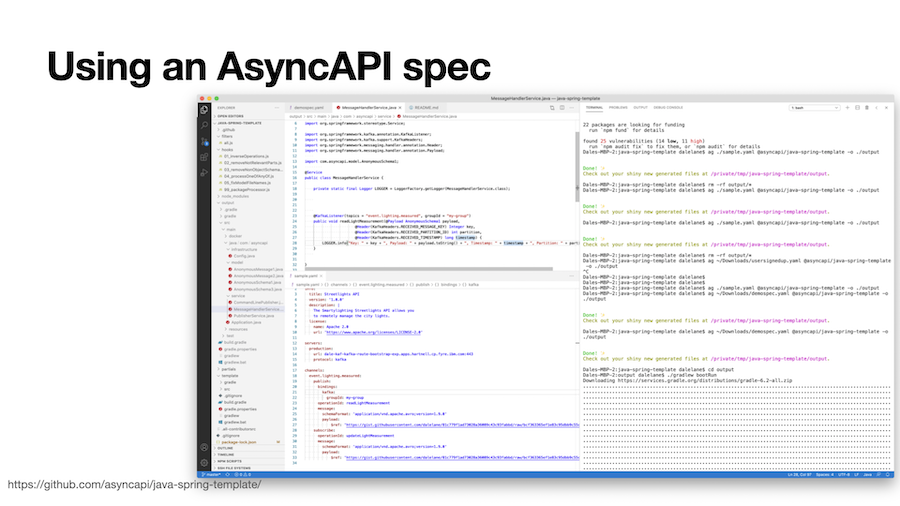

I’ve tried playing with a couple of examples of supporting tools in the ecosystem.

I’ve already mentioned the Java Spring code generation – point it at an AsyncAPI spec, and it generates the source code for a Java app ready to start running against your Kafka cluster, with the connection information already set up.

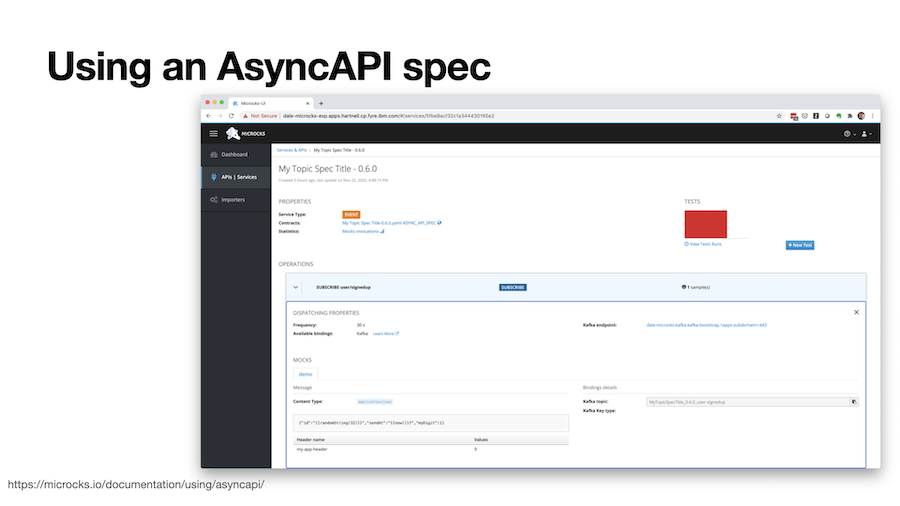

Microcks is another interesting tool. It deploys its own Kafka broker, and if you upload your AsyncAPI spec it creates Kafka topics based on the description in the spec, and starts generating and producing mock data to them at an interval that you specify.

It means you can define and document the data you plan to produce to your Kafka topics, and let Microcks set up a topic with a live stream of mock data matching that spec. And that lets you start developing your Kafka consumers against the mock topic without needing to wait until you have a real topic with real data.

I’m sure there is more that we can do with this, but this is an intro to starting to use AsyncAPI to describe your Kafka topics.

For more information, look at asyncapi.com.


Is there a tool (like SwaggerHub) you would recommend that supports AsyncAPI documentation? And how do we ensure the documentation and implementation are in sync?

You can see a list of tools at https://www.asyncapi.com/docs/community/tooling

Personally, I’m a big fan of the AsyncAPI plugin for Visual Studio Code.

As for keeping it in sync with your implementation, a lot of that is going to be organisational. AsyncAPI includes a version number, but that won’t enforce anything – it’s up to a team to increment that version number and update code in step.

I’m sure that there is more that tools could do to help with this, though – which is a super interesting idea.

@Dale thanks for this blog, it was really helpful.

By the way, could you explain the semantics for HTTP in an AsyncAPI spec? Does subscribe mean an HTTP request (mentioning the request in bindings) and publish mean the response (again mentioning it in bindings)?

It could also be the other way around: subscribe as an HTTP response (the app’s publish) and publish as an HTTP request (the client’s publish).

This fits if I follow semantics mentioned here:

https://asyncapi.io/blog/publish-subscribe-semantics

Or would one not use an AsyncAPI spec for a client-server API, as an OpenAPI spec is good enough for that, but use it for webhooks?

And if it is for webhooks, what would be the preferable presentation of the spec?

I would be happy if there were a blog post about this as well.