This is the third in a series of blog posts sharing examples of ways to use Mirror Maker 2 with IBM Event Streams.

- Using Mirror Maker 2 to aggregate events from multiple regions

- Using Mirror Maker 2 to broadcast events to multiple regions

- Using Mirror Maker 2 to share topics across multiple regions

- Using Mirror Maker 2 to create a failover cluster

- Using Mirror Maker 2 to restore events from a backup cluster

- Using Mirror Maker 2 to migrate to a different region

Mirror Maker 2 is a powerful and flexible tool for moving Kafka events between Kafka clusters, but I sometimes feel this gets forgotten when we only talk about it in the context of disaster recovery.

In these posts, I want to inspire you to think about other ways you could use Mirror Maker 2. The best way to learn about what is possible is to play with it for yourself, so with these posts I’ll include a script to create a demonstration of the scenario.

For this third post, I’ll look at using Mirror Maker to create logical topics shared across multiple regions.

Imagine that you are running applications in multiple different regions.

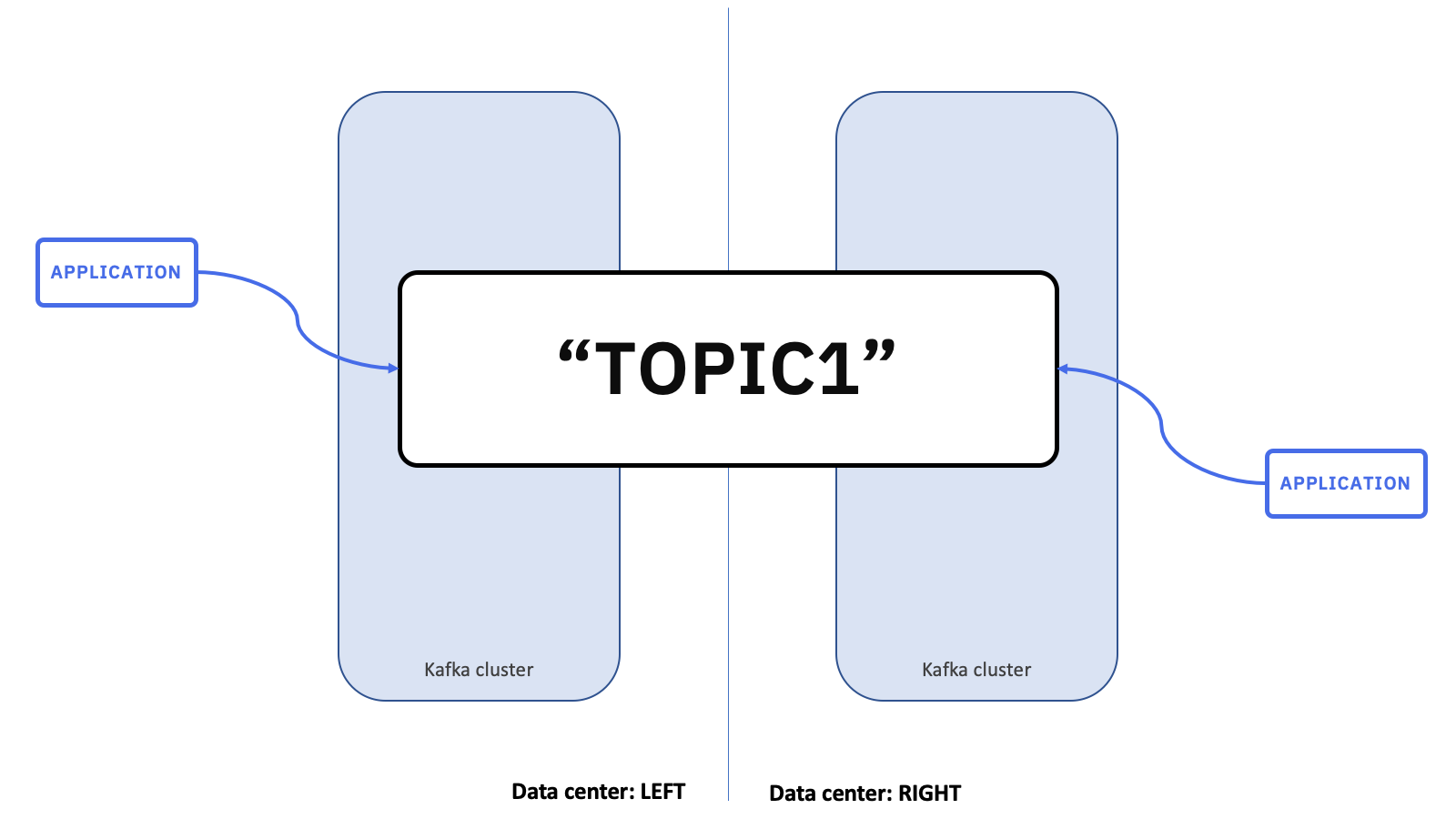

In this pattern, Mirror Maker can be used to create a conceptual, logical topic, which is spread across two separate Kafka clusters. This topic is locally accessible to client applications running in either region.

Under the covers, these are actually two pairs of topics.

Mirror Maker is mirroring each topic to the other region.

In these diagrams, there is a TOPIC1 topic in the “LEFT” region, which Mirror Maker 2 is replicating to a copy called LEFT.TOPIC1 in the “RIGHT” region.

The same happens in the opposite direction: there is a TOPIC1 topic in the “RIGHT” region, which Mirror Maker 2 is replicating to a copy called RIGHT.TOPIC1 in the “LEFT” region.

This naming convention (prefixing the logical topic name with the name of where the canonical copy is hosted) helps to keep everything organized.
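The prefixing rule can be sketched in a few lines of Python. This is only an illustration of the naming convention described above, not Mirror Maker's own code: the function name `mirrored_topic_name` is made up for this example, and the `.` separator is simply the one used in the topic names shown in the diagrams.

```python
def mirrored_topic_name(source_region: str, topic: str, separator: str = ".") -> str:
    """Return the name of the mirrored copy of a topic.

    The copy is prefixed with the name of the region that hosts the
    canonical topic, so its origin is visible from the name alone.
    """
    return f"{source_region}{separator}{topic}"


# The canonical TOPIC1 in LEFT is mirrored to the RIGHT region under this name
print(mirrored_topic_name("LEFT", "TOPIC1"))

# The canonical TOPIC1 in RIGHT is mirrored to the LEFT region under this name
print(mirrored_topic_name("RIGHT", "TOPIC1"))
```

The two calls produce the names LEFT.TOPIC1 and RIGHT.TOPIC1 used in the diagrams.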

Applications running in each region send messages to the canonical topic hosted by their local Kafka cluster. This means that producers running in the “LEFT” region produce messages to the TOPIC1 topic hosted in the Kafka cluster in the “LEFT” region.

And producers running in the “RIGHT” region produce messages to the TOPIC1 topic hosted in the “RIGHT” Kafka cluster.

With this pattern, applications receive and process all messages produced in all regions. To do this, consuming applications consume from both copies of the topic in their local Kafka cluster: the canonical topic and the mirrored copy of the remote topic. They receive messages produced by applications in their own region, as well as messages produced in the remote regions.
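As a rough sketch of what "consume from both copies" means in practice, the snippet below works out which topics a consumer in a given region would read from its local cluster. The helper name `subscription_for` and the region list are assumptions made for this illustration; the topic names follow the LEFT/RIGHT example from the diagrams.

```python
def subscription_for(local_region: str, all_regions: list[str], topic: str,
                     separator: str = ".") -> list[str]:
    """List the local topics a consumer reads to see events from every region.

    The first entry is the canonical topic, written to by local producers.
    The remaining entries are the mirrored copies of each remote region's topic.
    """
    remote_regions = [region for region in all_regions if region != local_region]
    return [topic] + [f"{region}{separator}{topic}" for region in remote_regions]


regions = ["LEFT", "RIGHT"]
for region in regions:
    # Every topic in the list is hosted on the consumer's local Kafka cluster
    print(region, subscription_for(region, regions, "TOPIC1"))
```

The resulting list is what a consumer would pass to its Kafka client's subscribe call. With more regions the list simply grows by one mirrored topic per remote region.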

Mirroring is an efficient way of enabling conceptual distributed topics that are resilient to the loss of a region.

This is a highly resilient architecture. Because the single logical topic spans both clusters and regions, applications can keep producing to it and processing messages from it even if a whole region is lost.

In environments where many applications in multiple regions are consuming all events from applications in other regions, there are also significant network infrastructure benefits from only transferring events between regions once, instead of once for each application.
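A back-of-the-envelope calculation shows the scale of the saving. The sketch below assumes a simplified model in which every application in every region consumes every event; the function name and the numbers are hypothetical and only there to illustrate the arithmetic.

```python
def cross_region_transfers(events: int, regions: int,
                           consumers_per_region: int, mirrored: bool) -> int:
    """Count how many times events cross a region boundary.

    events: total number of events produced across all regions
    regions: number of regions
    consumers_per_region: applications per region, each consuming every event
    mirrored: True if each event is mirrored once to each remote region,
              False if every application fetches directly from remote clusters
    """
    remote_regions = regions - 1
    if mirrored:
        # One copy per remote region, shared by all consumers in that region
        return events * remote_regions
    # Every consumer in every remote region pulls its own copy
    return events * remote_regions * consumers_per_region


# Hypothetical workload: 1000 events, 3 regions, 5 consuming applications per region
print(cross_region_transfers(1000, 3, 5, mirrored=True))   # 2000
print(cross_region_transfers(1000, 3, 5, mirrored=False))  # 10000
```

In this model the cross-region traffic with mirroring stays the same however many consuming applications are added, while direct remote consumption grows with every new application.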

Applications benefit as well, through the latency benefits of communicating with a local Kafka cluster.

Using Mirror Maker 2 in this way is a good fit where events are generated in multiple locations and need to be processed by applications running in multiple remote locations. This is particularly beneficial where the applications are sensitive to latency or where cross-region network traffic is considered expensive.

Demo

For a demonstration of this, I created a three-region version of this pattern:

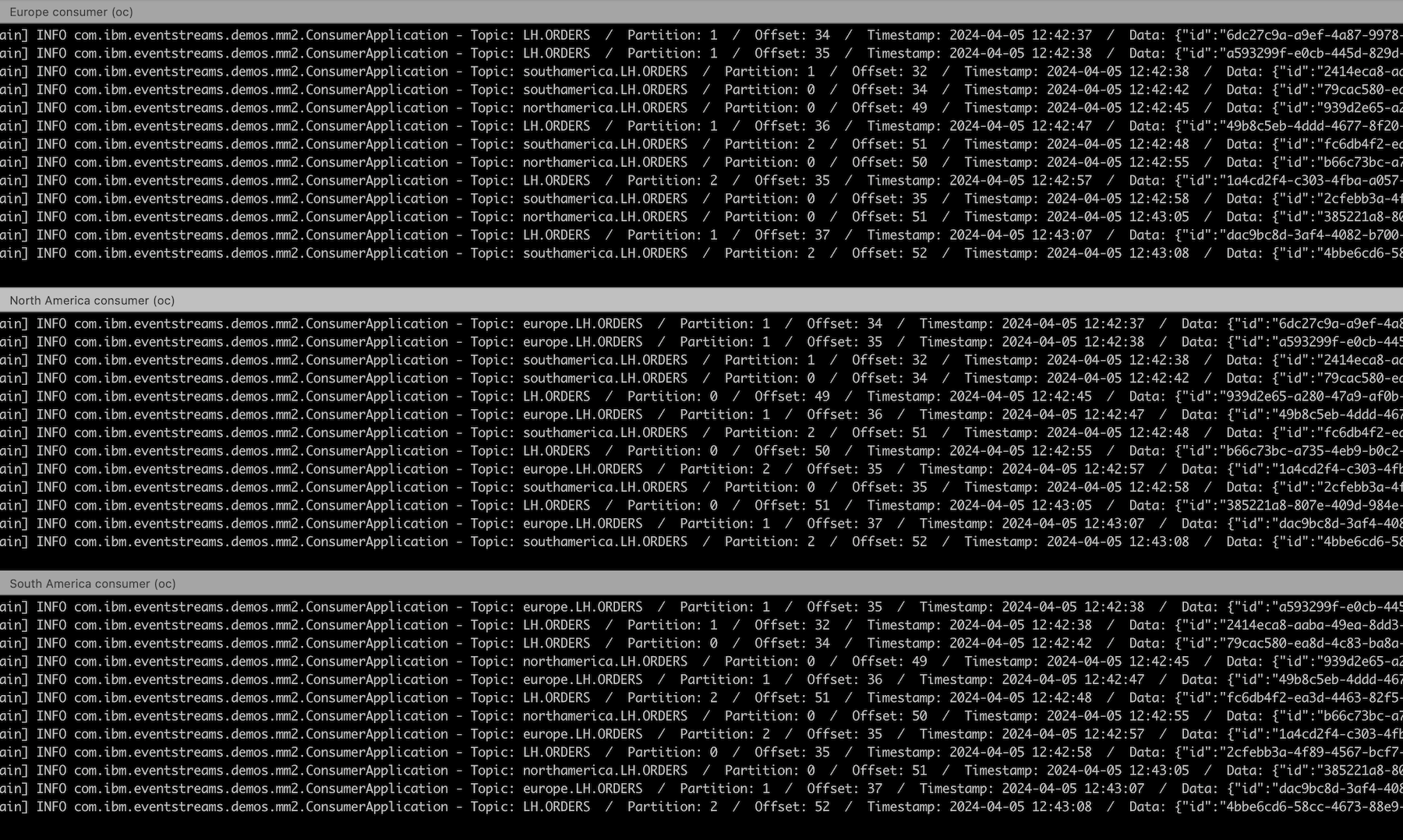

Three Kubernetes namespaces (“north-america”, “south-america”, “europe”) represent three different regions. An Event Streams Kafka cluster is created in each “region”.

A producer application is started in each region. Each one regularly produces randomly generated events, localised to its region and themed around a fictional clothing retailer, Loosehanger Jeans.

A consumer application is started in each region. These consume all events produced in all regions.

To create the demo for yourself

There is an Ansible playbook here which creates all of this:

github.com/dalelane/eventstreams-mirrormaker2-demos/blob/master/04-shared-aggregate/setup.yaml

An example of how to run it can be found in the script at: setup-04-shared-aggregate.sh

This script will also display the URL and username/password for the Event Streams web UI for all three regions, to make it easier to log in and see the events.

Once you’ve created the demo, you can run the consumer scripts to see that all consumers receive all events from all regions.

- consumer-northamerica.sh: to see events received by the consumer running in the “North America” region

- consumer-southamerica.sh: to see events received by the consumer running in the “South America” region

- consumer-europe.sh: to see events received by the consumer running in the “Europe” region

(Once you’ve finished, the cleanup.sh script deletes everything that the demo created.)

How the demo is configured

The Mirror Maker configs can be found here:

- mm2-na.yaml (Mirror Maker in the “North American” region)

- mm2-sa.yaml (Mirror Maker in the “South American” region)

- mm2-eu.yaml (Mirror Maker in the “Europe” region)

The specs are commented so these are the files to read if you want to see how to configure Mirror Maker to satisfy this kind of scenario.

I’ve only talked here about how MM2 is moving the events between regions, but if you look at the comments in the mm2 specs, you’ll see that it is doing more than that. For example, it is also keeping the topic configuration in sync. Try that for yourself. Modify the configuration of one of the topics in the “Europe region” and then see that change reflected in the corresponding topics in the North America and South America “regions”.

More scenarios to come

I’ve still got some more ideas of scenarios that show off the benefits of the checkpoint connector, so I’ll add more posts soon.

Tags: apachekafka, ibmeventstreams, kafka