This is the fifth in a series of blog posts sharing examples of ways to use Mirror Maker 2 with IBM Event Streams.

- Using Mirror Maker 2 to aggregate events from multiple regions

- Using Mirror Maker 2 to broadcast events to multiple regions

- Using Mirror Maker 2 to share topics across multiple regions

- Using Mirror Maker 2 to create a failover cluster

- Using Mirror Maker 2 to restore events from a backup cluster

- Using Mirror Maker 2 to migrate to a different region

Mirror Maker 2 is a powerful and flexible tool for moving Kafka events between Kafka clusters.

For this fifth post, I’ll look at using Mirror Maker 2 to maintain a backup of your Kafka events, and to restore from that backup.

This is more complex than the previous posts as there are multiple stages involved. For each stage, I’ll explain the intent and share the demo script I’ve created to let you try this for yourself.

Initial setup

Overview

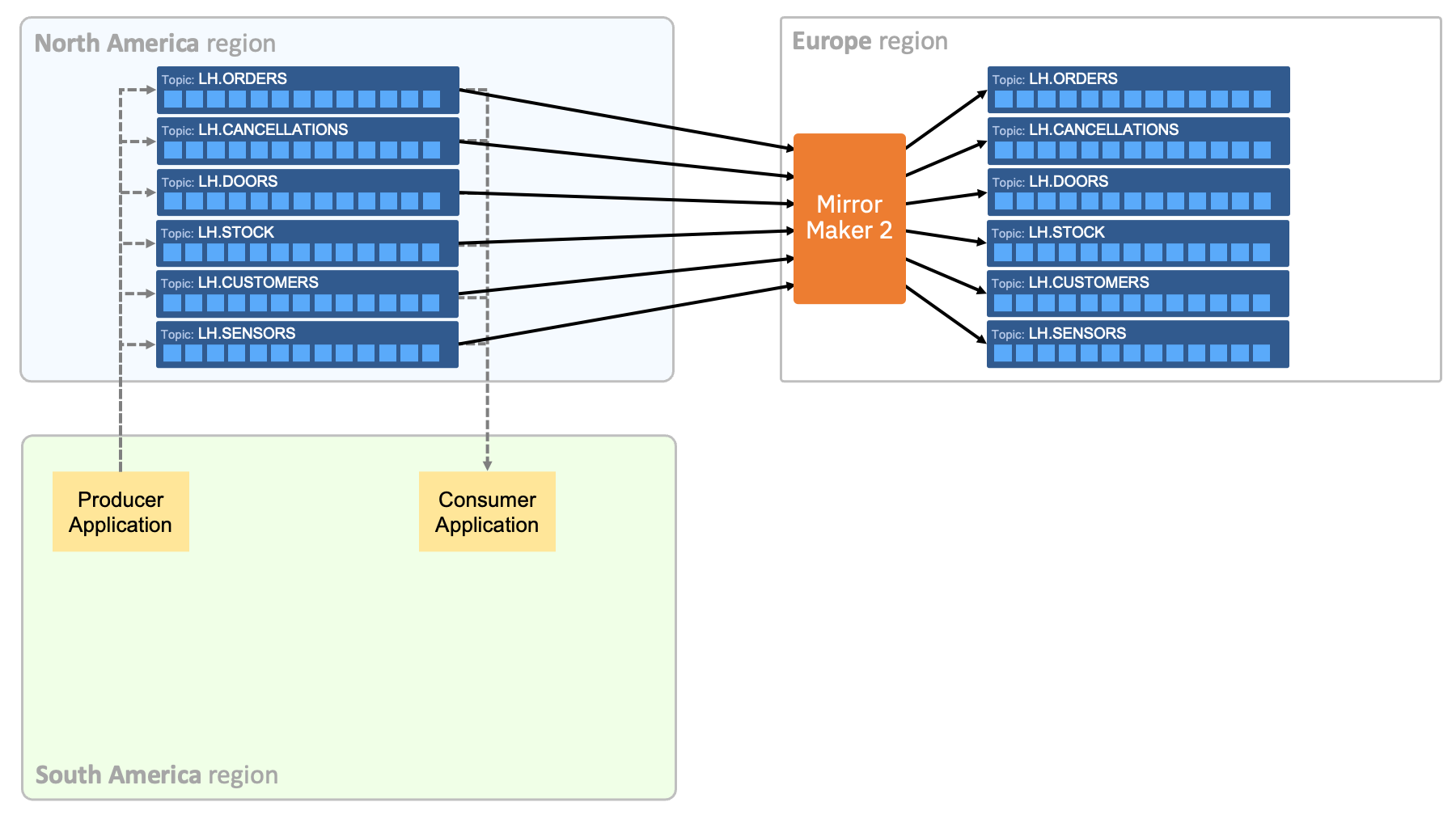

The goal for this stage is to create this:

Three Kubernetes namespaces (“north-america”, “south-america”, “europe”) represent three different regions.

The “North America region” represents the primary, active environment for the Kafka cluster.

Applications run in the “South America region” and produce to and consume from topics in the Kafka cluster.

As with previous posts, the producer application is regularly producing randomly generated events, themed around a fictional clothing retailer, Loosehanger Jeans.

In the background, Mirror Maker 2 is maintaining a backup of the Kafka cluster in the “Europe region”.

To create the demo for yourself

There is an Ansible playbook here which creates this stage:

github.com/dalelane/eventstreams-mirrormaker2-demos/blob/master/06-backup-restore/initial-setup.yaml

An example of how to run it can be found in the script at: setup-06-backup.sh

This script will also display the URL and username/password for the Event Streams web UI for the North America and Europe regions, to make it easier to log in and see the events.

Once you’ve created the demo, you can run the consumer-southamerica.sh script to see the events being received by the consumer application in the “South America region”.

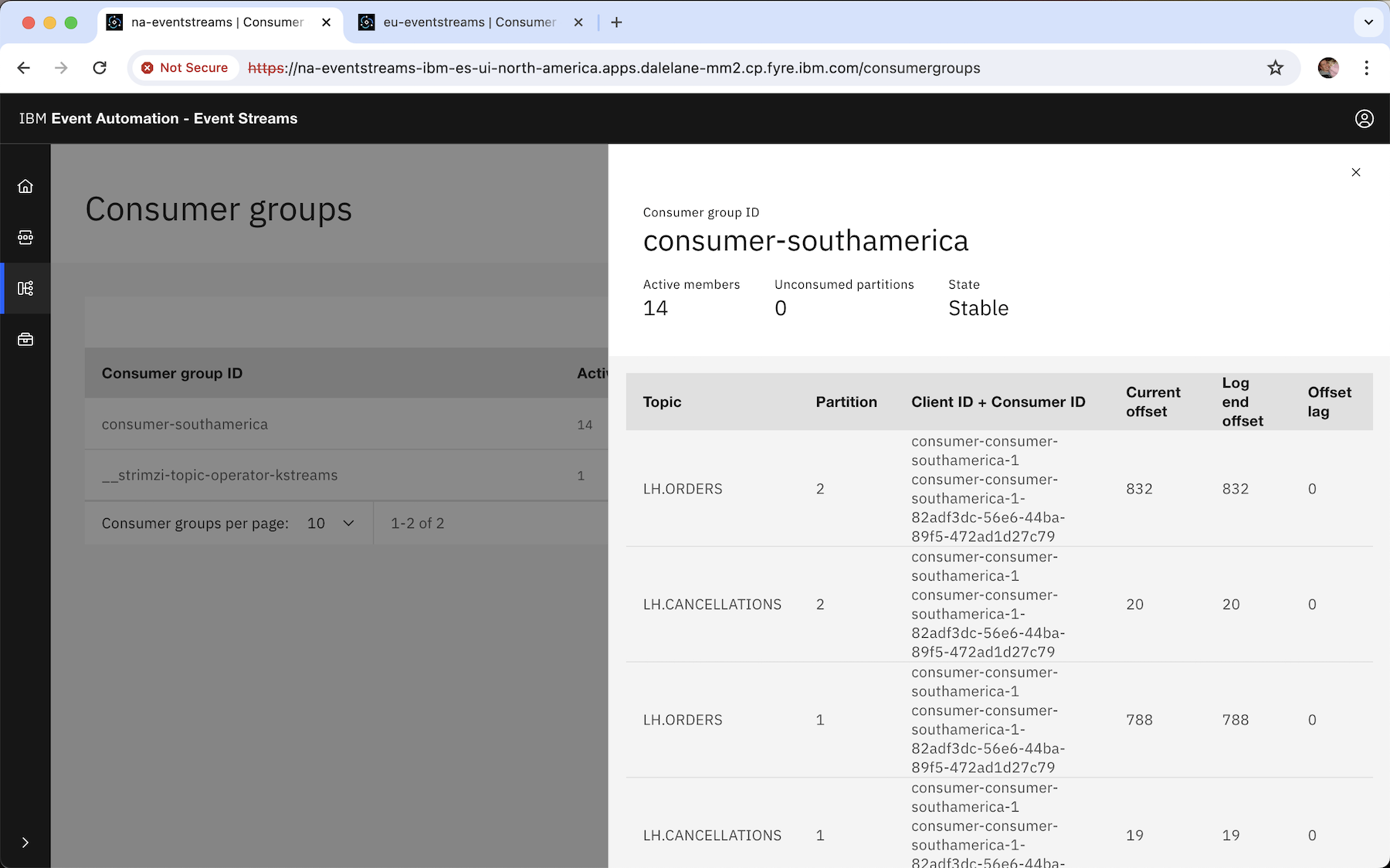

If you log in to the Event Streams web UI for the active cluster in the “North America region”, you will see information about the consumer application listed there.

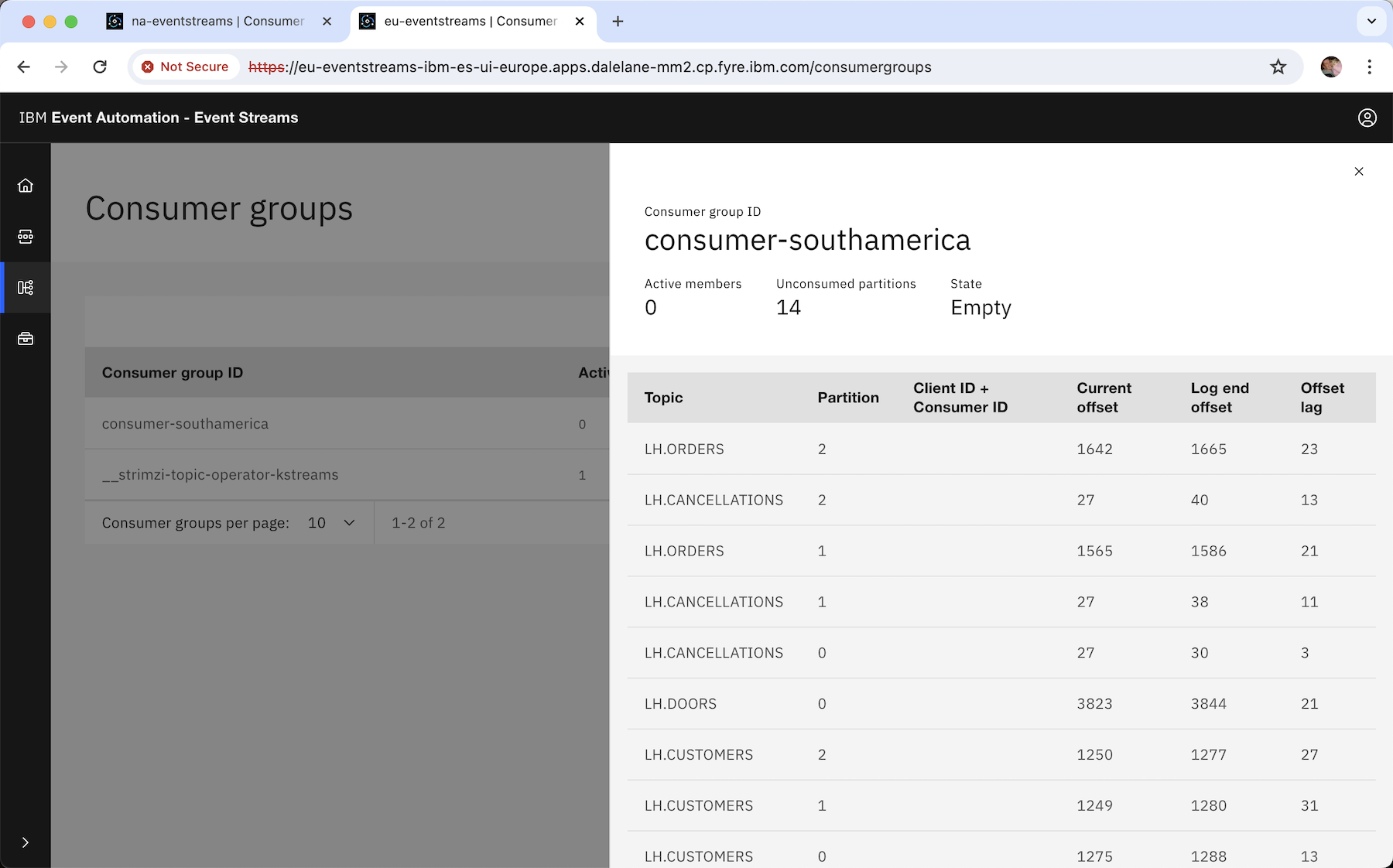

If you log in to the Event Streams web UI for the passive cluster in the “Europe region”, you will see the consumer application listed there as well.

This isn’t a separate application. There is no consumer connected to the “Europe region”.

For this scenario, Mirror Maker is backing up the state of consumer applications as well as topics – this is a mirrored record of the same application consuming from the “North America region”.

(Note: There is a configurable lag in backing up application offsets, as I discussed in the post on Using Mirror Maker 2 to create a failover cluster.)

How the demo is configured

The Mirror Maker config can be found here: mm2-backup.yaml.

The spec is commented, so that is the main file to read if you want to see how to configure Mirror Maker to maintain a backup.

As there are no applications actively using the backup cluster, it is important to be able to monitor it, to have confidence that Mirror Maker is still mirroring the topics. To illustrate one way that this can be done, the Mirror Maker 2 spec for this demo includes a heartbeat connector, which will regularly produce heartbeat events to the backup cluster.
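One simple way to act on those heartbeats is to raise an alert when the newest one is older than you expect. This is a minimal sketch in Python, assuming you have already read the timestamp of the latest heartbeat event from the backup cluster with whatever Kafka client you prefer. The function name and the 30-second threshold are my own illustrative choices, not something taken from the demo.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical threshold: treat the mirror as unhealthy if no heartbeat
# has arrived on the backup cluster for more than 30 seconds.
MAX_HEARTBEAT_AGE = timedelta(seconds=30)


def heartbeat_is_stale(last_heartbeat, now, max_age=MAX_HEARTBEAT_AGE):
    """Return True when the newest heartbeat is older than max_age."""
    return (now - last_heartbeat) > max_age


# Fixed timestamps so the example is repeatable.
now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
recent = now - timedelta(seconds=5)
old = now - timedelta(minutes=5)

print(heartbeat_is_stale(recent, now))  # False
print(heartbeat_is_stale(old, now))     # True
```

The right threshold depends on how often your heartbeat connector is configured to emit events, so treat the value here as a placeholder.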

Loss of the primary cluster

Overview

In this stage, the primary region is lost, resulting in the applications losing the ability to produce or consume.

To create the demo for yourself

In this demo, Kubernetes namespaces are being used to represent regions. To represent the total loss of the “North America region”, delete the north-america namespace.

oc delete project north-america

Recreating the primary cluster from the backup

Overview

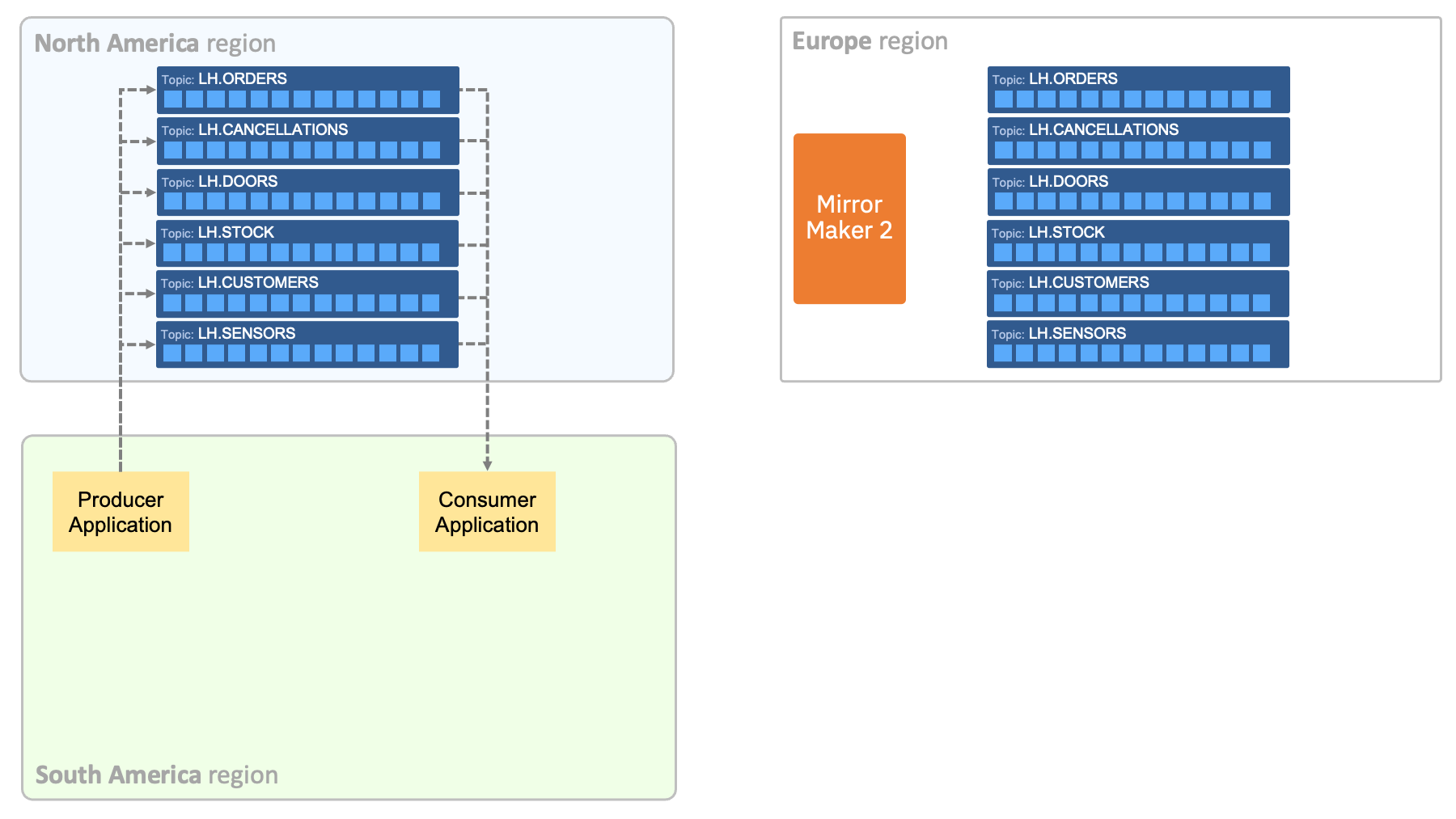

The goal for this stage is to recreate the primary cluster.

The Kafka cluster and topic definitions are recreated using the same specifications used to create the original cluster.

Usernames/passwords and truststore certificates are restored from a backup.

The events on the Kafka topics are restored by Mirror Maker 2, mirroring in the opposite direction from the backup cluster.

The producer and consumer applications are administratively paused, so that they don’t interfere with the restore process.

To create the demo for yourself

There is an Ansible playbook here which creates this stage:

github.com/dalelane/eventstreams-mirrormaker2-demos/blob/master/06-backup-restore/recreate-from-backup.yaml

An example of how to run it can be found in the script at: setup-06-restore.sh

This script will again display the URL and username/password for the Event Streams web UI for the North America and Europe regions, to make it easier to log in and see how the restore process is proceeding.

Mirror Maker won’t automatically stop once the restore has finished, so you need to identify when it has completed by comparing the topics on the backup and primary clusters.

How long this takes depends on how many events there are to restore, which is mostly determined by how long you left the demo running in the first stage.

Remember to compare message contents, keys, and timestamps – not offsets – as offsets will not be consistent across clusters. (Consumer offsets are automatically rewritten when mirrored to take the difference into account.)
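As a rough illustration of that comparison, here is a minimal Python sketch. It assumes you have already fetched the records for one topic partition from each cluster into memory. The `Record` type and the `restore_complete` function are hypothetical names of mine, and the sample events are made up.

```python
from typing import NamedTuple, Optional


class Record(NamedTuple):
    offset: int
    timestamp_ms: int
    key: Optional[bytes]
    value: Optional[bytes]


def content(records):
    """Keep only the fields expected to match across clusters (no offsets)."""
    return [(r.timestamp_ms, r.key, r.value) for r in records]


def restore_complete(backup, primary):
    """True when both partitions hold the same events in the same order."""
    return content(backup) == content(primary)


# Same events, different offsets on each cluster.
backup = [
    Record(40, 1700000000000, b"order-1", b"jeans"),
    Record(41, 1700000001000, b"order-2", b"shirt"),
]
primary = [
    Record(0, 1700000000000, b"order-1", b"jeans"),
    Record(1, 1700000001000, b"order-2", b"shirt"),
]

print(restore_complete(backup, primary))      # True
print(restore_complete(backup, primary[:1]))  # False: still restoring
```

This strict comparison treats any duplicated event as a mismatch, so you may want something more forgiving if duplicates are acceptable for your topics.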

Wait for this to finish restoring all of the topics before continuing to the next stage.

How the demo is configured

The Mirror Maker config can be found here: mm2-restore.yaml.

The spec is commented, so that is the main file to read if you want to see how to configure Mirror Maker to restore from a backup cluster.

Resuming applications

Overview

The goal for this stage is to allow the producer and consumer applications to resume what they were doing before the primary region was lost.

Once Mirror Maker 2 has finished restoring all of the events from the backup cluster, it should be removed (together with the associated resources, such as topics it uses to maintain state).

After the Mirror Maker 2 restore job has been completely removed, the applications can resume from approximately where they had got to before the primary region was lost.

To create the demo for yourself

There is an Ansible playbook here which creates this stage:

github.com/dalelane/eventstreams-mirrormaker2-demos/blob/master/06-backup-restore/resume-applications.yaml

An example of how to run it can be found in the script at: setup-06-resume.sh

Once you’ve done this, you can run the consumer-southamerica.sh script to see the events being received again by the consumer application in the “South America region”.

You should see that it didn’t need to start from the beginning of the topic, because consumer offsets were restored together with the topic events.
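The consumer can pick up where it left off because its committed offset was translated into the equivalent position on the restored topic. To give a feel for what that translation involves, here is a deliberately simplified Python sketch. It is not Mirror Maker 2's actual implementation. It assumes you have a list of known points where an offset on the source cluster corresponds to an offset on the target cluster, and that offsets are contiguous after each point. The `translate_offset` function and the sample values are hypothetical.

```python
import bisect


def translate_offset(offset_syncs, source_offset):
    """Map a source-cluster offset to the matching target-cluster offset.

    offset_syncs is a list of (source_offset, target_offset) pairs,
    sorted by source_offset. Returns None if source_offset is earlier
    than the first known sync point.
    """
    source_points = [source for source, _ in offset_syncs]
    # Find the last sync point at or before the offset being translated.
    i = bisect.bisect_right(source_points, source_offset) - 1
    if i < 0:
        return None
    sync_source, sync_target = offset_syncs[i]
    # Simplifying assumption: offsets advance in step after the sync point.
    return sync_target + (source_offset - sync_source)


# Source offset 40 is target offset 0; source offset 140 is target offset 100.
syncs = [(40, 0), (140, 100)]

print(translate_offset(syncs, 75))   # 35
print(translate_offset(syncs, 150))  # 110
print(translate_offset(syncs, 10))   # None
```

In practice the translation is approximate, which is one reason the applications resume from roughly, not exactly, where they were before.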

Setting up a new backup

Overview

The final stage is to revive the backup Mirror Maker 2 job, in case the “North America region” is lost again.

Whether this should be done before or after the applications are resumed depends on the priorities for the solution.

Resuming applications first has the benefit of minimising the downtime for the applications – allowing them to resume processing as soon as the primary cluster has been restored. However, it comes with the small risk of data loss if the “North America region” is immediately lost again.

Setting up the new backup first reduces this small risk, but comes at the cost of an additional delay before applications can resume their processing.

To create the demo for yourself

There is an Ansible playbook here which creates this stage:

github.com/dalelane/eventstreams-mirrormaker2-demos/blob/master/06-backup-restore/recreate-from-backup.yaml

An example of how to run it can be found in the script at: setup-06-setup-new-backup.sh

Tags: apachekafka, ibmeventstreams, kafka