We’ve been running a virtual event this week to explain the capabilities of IBM’s Cloud Pak for Integration.

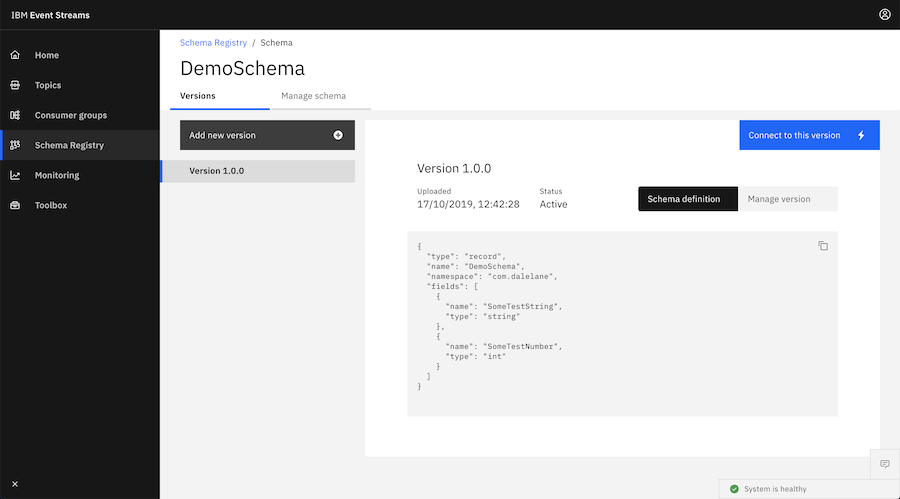

One of these is Event Streams, so I gave an overview of the Event Streams Operator.

But what it really reminded me is that I miss going to conferences and tech events. I don’t want to sound ungrateful for what I’m sure has been a huge amount of work for event organisers in the pivot to online events. It’s great that we can still do events at all, and that organisers are still trying out ways to make it interactive, to enable panels and Q&A sessions.