Ninety-nine percent of the time, I think of Strava as a running app. But every now and then, I do something a bit different with it.

Here are a few examples!

Record your way through a maze

When we explored the maze at Wildwood, Strava was a fun way to see just how lost we got.

Being able to see how many times we went round the same sections of the maze on an interactive map adds a great layer to the experience.

This is a great use of Strava… you just need to find a large outdoor maze.

link – Strava activity

Run on a moving surface

We were on a ship with a running track last week, so I thought this was another chance to do something weird with Strava.

My plan was to run laps of the ship deck while it was at sea, and use that to draw a cool spiral GPS trace with each lap’s GPS location slightly offset from the last.

I massively overestimated how fast I run compared with the speed of a ship.

My GPS trace was essentially a straight line. The waves in the line reflect the slight difference, on each short lap, between running in the same direction as the ship and running in the opposite direction.

The resulting stats are entertainingly ridiculous. I love that Strava was suspicious of my ability to run sub-3-minute miles.

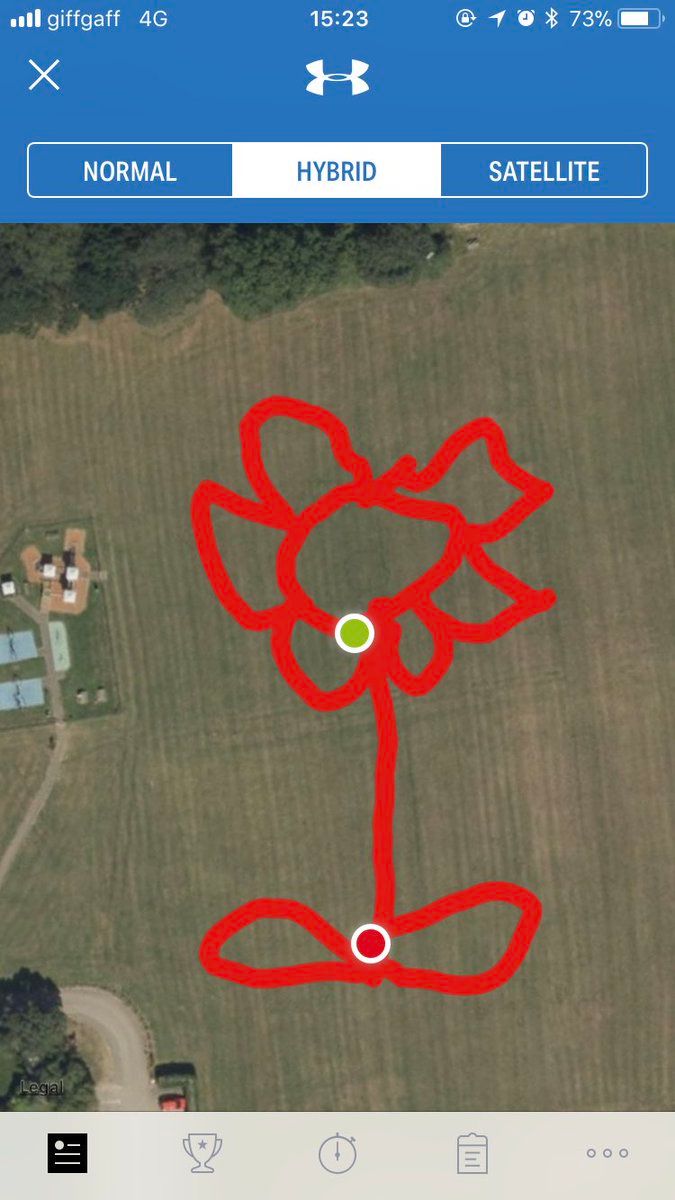

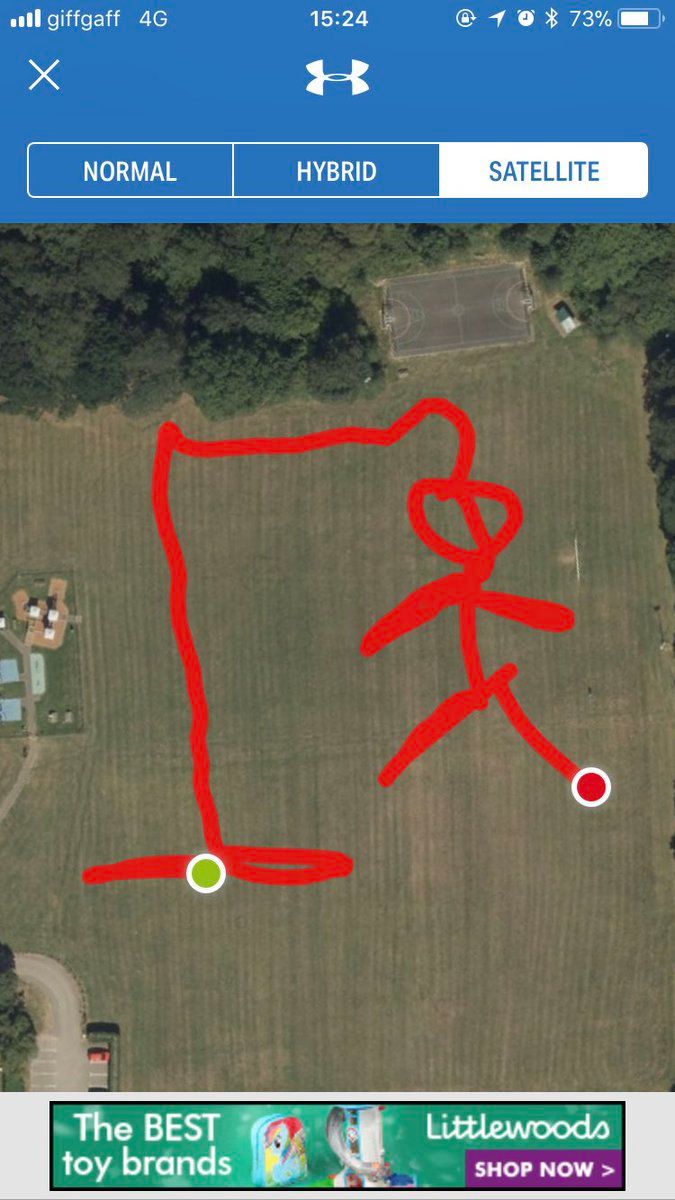

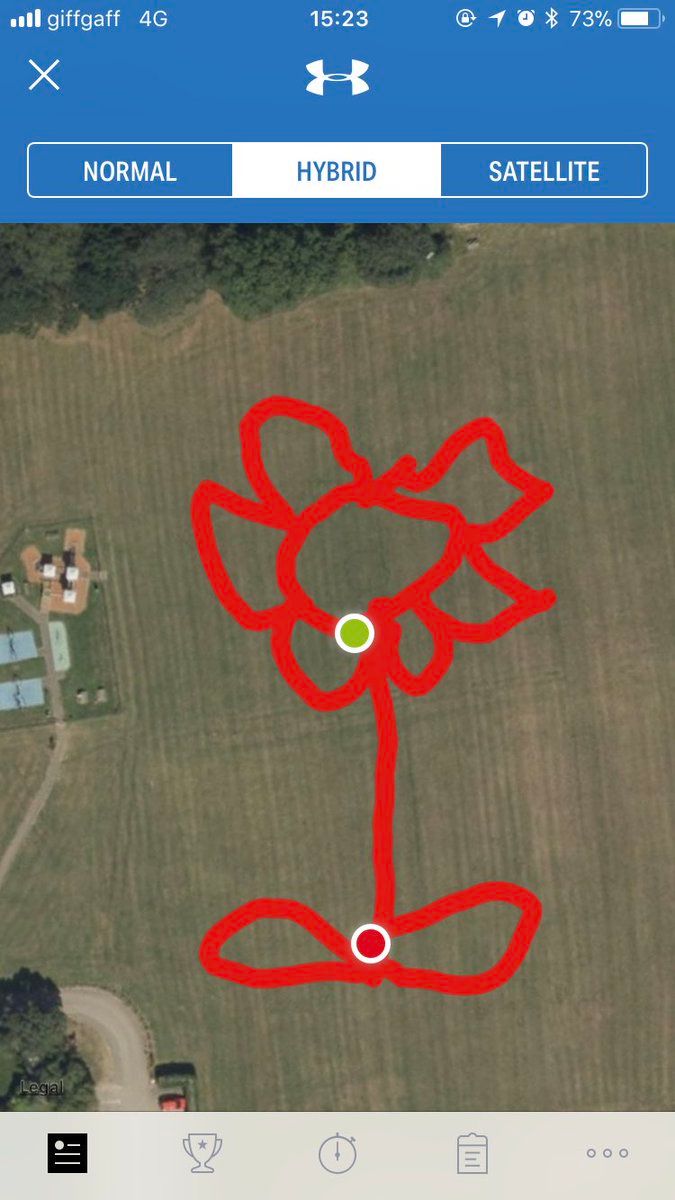

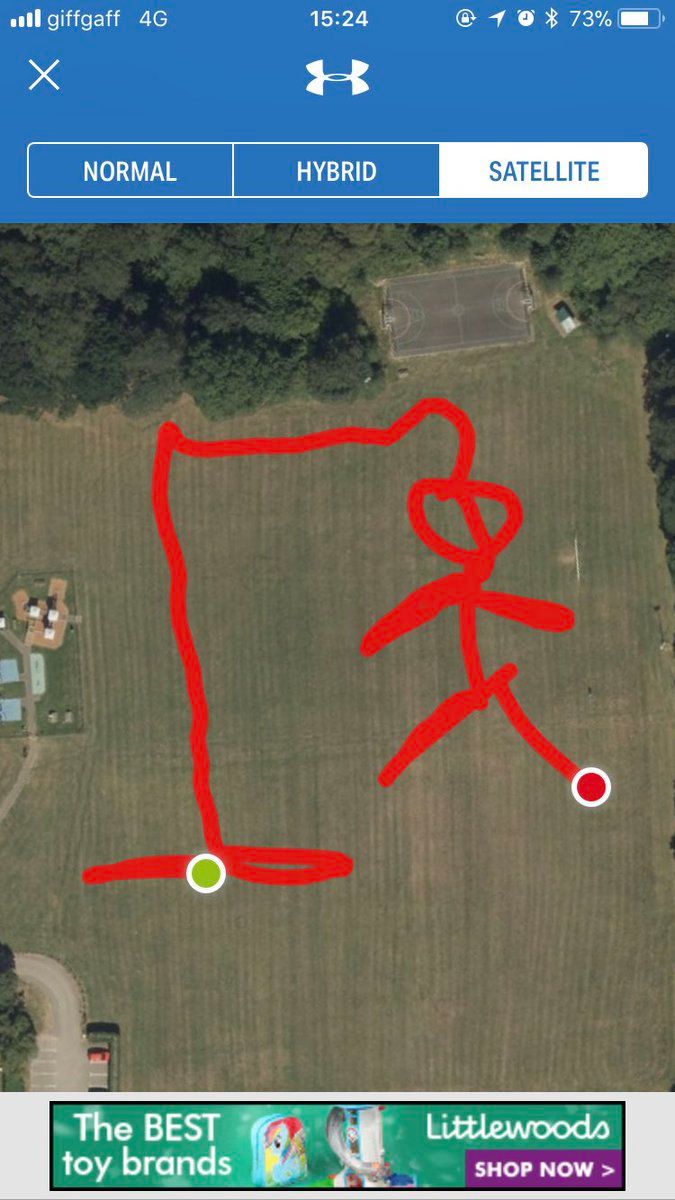
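For anyone curious why the spiral never appeared, here is a rough Python sketch of the arithmetic. The 20-knot ship speed and 10 km/h running speed are illustrative assumptions, not figures from the activity, and the function names are made up for this example. It only models the straights that run along the ship's heading.

```python
KNOT_IN_M_PER_S = 1852 / 3600   # one knot is one nautical mile (1852 m) per hour
METRES_PER_MILE = 1609.344


def ground_speed(ship_knots, runner_kmh, with_ship):
    """Speed over the ground (m/s) on a straight parallel to the ship's heading."""
    ship = ship_knots * KNOT_IN_M_PER_S
    runner = runner_kmh / 3.6
    # Running towards the bow adds to the ship's speed; towards the stern subtracts.
    return ship + runner if with_ship else ship - runner


def pace_per_mile(speed_m_per_s):
    """Format a speed in m/s as a minutes:seconds per mile pace."""
    seconds = round(METRES_PER_MILE / speed_m_per_s)
    return f"{seconds // 60}:{seconds % 60:02d}"


# Assumed values for illustration only.
SHIP_KNOTS = 20
RUNNER_KMH = 10

towards_bow = ground_speed(SHIP_KNOTS, RUNNER_KMH, with_ship=True)
towards_stern = ground_speed(SHIP_KNOTS, RUNNER_KMH, with_ship=False)
ship_only = SHIP_KNOTS * KNOT_IN_M_PER_S

print("Towards the bow:  ", pace_per_mile(towards_bow), "per mile")
print("Towards the stern:", pace_per_mile(towards_stern), "per mile")
print("Ship alone:       ", pace_per_mile(ship_only), "per mile")
```

With these assumed numbers, the speed over the ground stays positive even on the stern-bound straight, so the trace never doubles back on itself. The runner only makes the line speed up and slow down a little, which is consistent with a wavy straight line rather than a spiral.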

Draw pictures

This is the most common “something weird with Strava” idea I’ve seen people try: find a field and use GPS as a massive virtual Etch A Sketch.

Our local park is ideal for this.

link – not actually Strava, but similar enough!

Geo-tagged photos

We stumbled upon a ceramic tile mosaic styled to look like 8-bit video game pixel art. It was just on a random wall, with nothing announcing, explaining, or sign-posting it.

After accidentally finding a second, different mosaic nearby, I decided to spend an evening finding more!

I took pictures of each mosaic.

With Strava, a random collection of photos becomes an annotated interactive map, showing where each photo was taken and where each mosaic is.

(I’ve since learned that these are the work of a street artist called Invader – thanks, Paul!)

link – Strava activity

What else?

If I played sports, I’d try recording a game – I think a trace of where you’ve run during football sounds like a fun idea.

What other uses are there for a GPS trace?